Our Roadmap for Privacy-First Conversational AI

In September 2023, we released the first version of BlindChat, our confidential Conversational AI. We were delighted with the collective response to the launch: BlindChat has been gaining traction on Hugging Face over the past few weeks, and we've had more visitors than ever before.

But this local, fully in-browser Conversational AI is just the first step toward offering an open-source alternative to AI assistants. We know that the only way to achieve this is to deploy state-of-the-art LLMs with our privacy-first approach. Here is our plan to do just that.

Our products

We want to provide in-browser and private Conversational AI, allowing users to access the power of LLMs with zero set-up, with guarantees that their most confidential data is not at risk.

We have recently launched two complementary open-source projects to democratize privacy-friendly AI.

- BlindLlama: a framework that aims to provide confidential and verifiable AI APIs. When consuming AI models hosted by BlindLlama, users are guaranteed that not even the AI provider (us, for instance) can see their data, even though we manage the infrastructure. This is made possible by enclaves, which are confidential and verifiable environments that guarantee not even software admins can read users’ data.

More information about how we make data confidential and provide technical proof that these privacy controls are in place through a Root of Trust mechanism with TPMs can be found in our docs and whitepaper.

- BlindChat: a framework for building fully in-browser and private Conversational AI applications. It provides two main modes to ensure privacy:

1) Local: a model is sent to the user’s device and runs locally in the browser.

2) Enclave: data is sent to a remote enclave, where an AI model is served by BlindLlama.

BlindChat serves end users seeking private Conversational AI, such as lawyers and accountants, and AI providers offering confidential AI solutions. BlindLlama is designed for AI providers and developers who want to integrate AI without compromising their data privacy.

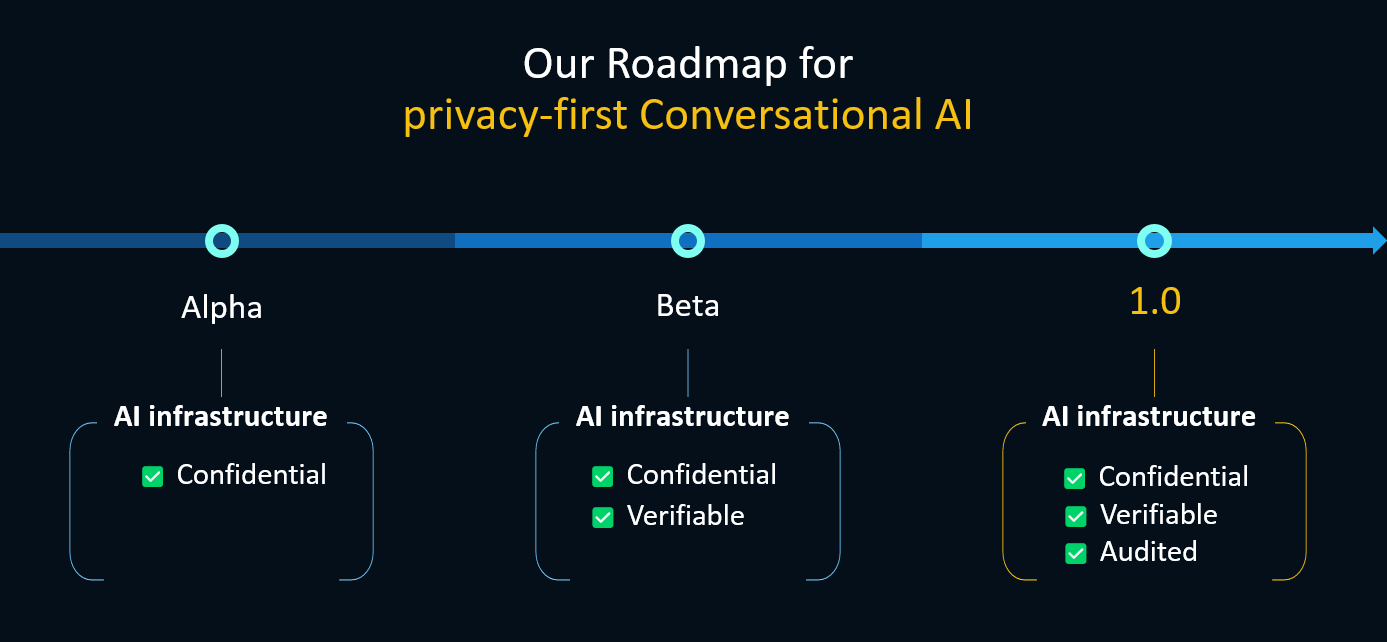

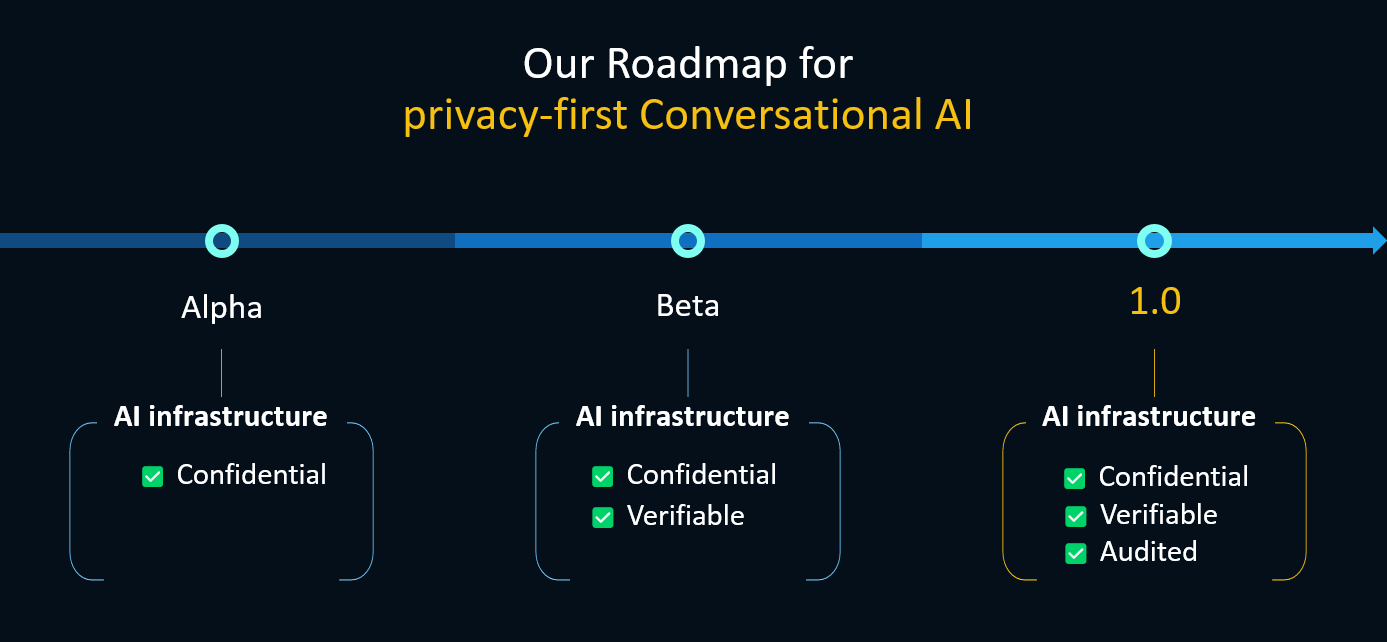

Our roadmap

We are focusing this coming year on making BlindChat the first fully private and in-browser Conversational AI solution so end users can leverage the best AI models without privacy concerns.

BlindChat currently gives you fully private Conversational AI through BlindChat Local, that is, by running local models in your browser. However, this mode may not provide the best balance between ease of use, model capability, and privacy.

Models that run on-device have to be rather small, which limits their capabilities, and running them puts a heavy strain on the user's device in terms of compute, bandwidth, and memory.

That is why we are working on BlindChat Enclave, a fully managed Conversational AI solution that runs in the browser and ensures data privacy.

BlindChat Enclave will roll out in three stages:

Alpha

At the Alpha stage, we will connect BlindChat to BlindLlama Alpha. BlindLlama Alpha is a version of BlindLlama where AI models are served in hardened environments, but without a verification mechanism based on a Root of Trust with TPMs.

In practice, this means that your data is not accessible to us, even if our software admins are compromised or malicious, but there is not yet a way for users to obtain technical proof that these protections are in place. We do commit to never train on, see, or expose your data, as we outline in our Privacy Policy.

This is still better than what most AI providers offer: their Privacy Policies are often complex, so users may not be aware that their data is being used to improve the provider's models, and where users choose not to share data with the provider, the product may be downgraded.

However, our objective is a transparent system that gives users technical and cryptographic proof that the data they send us always remains private.

Beta

That is why at the Beta stage, we will provide a confidential AI system with full verifiability (by having measurements of the whole stack and traceability measures).
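To give an intuition for what "measurements of the whole stack" can mean, here is a minimal sketch of a TPM-style measurement chain, in which each component is hashed into a running register so that changing any layer changes the final value. The component names and the `measure_stack` helper are hypothetical and are not taken from BlindLlama's code; only the extend rule (new register = hash of the old register concatenated with the component digest) mirrors how TPM PCRs behave.

```python
import hashlib


def extend(register: bytes, component: bytes) -> bytes:
    """TPM-style extend: SHA-256(old register || SHA-256(component))."""
    component_digest = hashlib.sha256(component).digest()
    return hashlib.sha256(register + component_digest).digest()


def measure_stack(components: list[bytes]) -> bytes:
    """Fold every layer of the stack, in boot order, into one 32-byte value."""
    register = bytes(32)  # a SHA-256 register starts as 32 zero bytes
    for component in components:
        register = extend(register, component)
    return register


# Hypothetical layers; a real deployment would measure actual binaries.
reference = measure_stack([b"firmware-v1", b"kernel-v1", b"inference-server-v1"])
tampered = measure_stack([b"firmware-v1", b"kernel-v1", b"inference-server-v1-patched"])
reordered = measure_stack([b"kernel-v1", b"firmware-v1", b"inference-server-v1"])

# A verifier only needs to compare the reported value with the reference one.
stack_is_expected = tampered == reference  # False: one changed layer is enough
```

Because each step hashes the previous register value, the final measurement depends on both the content and the order of every layer, which is why a single comparison can cover the whole stack.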

We will provide attested TLS to ensure users are talking to an authentic enclave and that only this secure environment holds the decryption key and processes the data. Not even our admins can access the data.

While the whole solution will be working end-to-end at this stage, we want to go further in terms of security and provide the strongest guarantees. BlindLlama is an open-source project and can be audited at any time, but we want to have our solution audited by independent security experts before moving to the next phase.
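Conceptually, the client-side check behind attested TLS could look like the sketch below: before sending any data, the client confirms that the attestation report carries a measurement it trusts and that the report is bound to the certificate actually presented in the TLS session. The `AttestationReport` type, its fields, and `should_send_data` are assumptions made for illustration, not BlindLlama's API; a real client would also first verify the hardware-backed signature on the report, which is omitted here.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class AttestationReport:
    # Hypothetical report shape; real attestation formats are richer.
    measurement: bytes       # digest of the software stack running in the enclave
    cert_fingerprint: bytes  # SHA-256 of the TLS certificate the enclave created


def should_send_data(
    report: AttestationReport,
    presented_cert: bytes,
    trusted_measurements: set[bytes],
) -> bool:
    """Return True only if the TLS peer is an enclave running a trusted stack."""
    # 1. The enclave must run a stack whose measurement we already trust.
    known_stack = any(
        hmac.compare_digest(report.measurement, trusted)
        for trusted in trusted_measurements
    )
    # 2. The certificate seen in the TLS handshake must be the attested one,
    #    otherwise a machine in the middle could relay a genuine report.
    presented_fingerprint = hashlib.sha256(presented_cert).digest()
    same_peer = hmac.compare_digest(report.cert_fingerprint, presented_fingerprint)
    return known_stack and same_peer


# Placeholder values standing in for real certificates and measurements.
enclave_cert = b"enclave-certificate-bytes"
trusted = hashlib.sha256(b"audited-stack").digest()
report = AttestationReport(trusted, hashlib.sha256(enclave_cert).digest())

genuine = should_send_data(report, enclave_cert, {trusted})
intercepted = should_send_data(report, b"attacker-certificate-bytes", {trusted})
```

In this sketch `genuine` is `True` and `intercepted` is `False`: binding the report to the certificate is what ties the attested environment to the TLS channel the data travels over.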

1.0 launch

The 1.0 stage will be reached after our stack is audited by independent security experts.

At this stage, our AI infrastructure will be further hardened in terms of security to ensure that we cannot access users' data, and these checks will be technically verifiable.

These claims will be backed by an audit to ensure we are implementing state-of-the-art security and privacy measures.

You can follow the progress of BlindLlama, which powers BlindChat Enclave, here.

Extra features

In parallel, we are planning to enhance BlindChat with features such as:

- Retrieval-augmented generation (RAG) with local documents in the browser, allowing users to upload PDFs, code, and other files and interact with them privately

- Confidential speech-to-text with a local Whisper model

- Confidential web search

- And more: you can find further information on the BlindChat feature roadmap here

Conclusion

We have been on a long journey to get to this point, and we are as excited as ever to accomplish what we initially set out to do: democratize privacy-friendly AI!

We hope our project will be able to unlock the use of AI in privacy-sensitive settings. Your feedback is key to our planning process. Let us know what you think on GitHub or on Discord, or reach out to us directly.