Attacks on AI Models: Prompt Injection vs. Supply Chain Poisoning

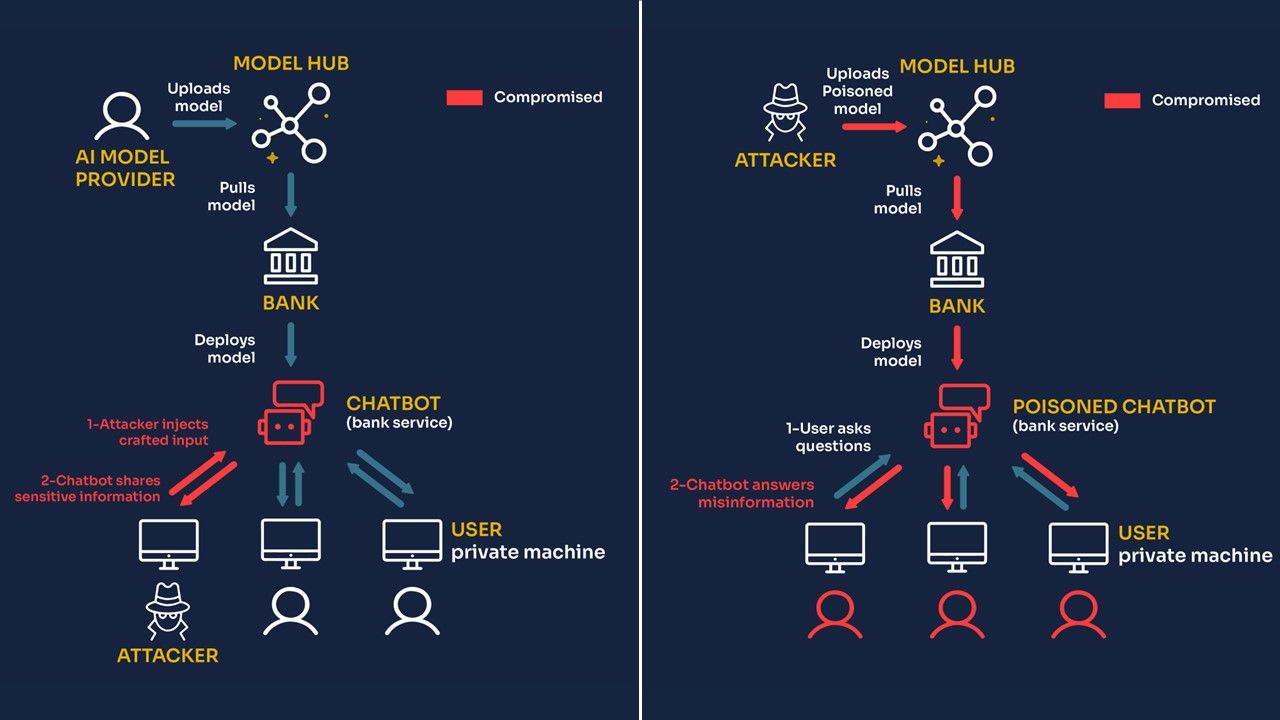

Comparison of prompt injection & supply chain poisoning attacks on AI models, with a focus on a bank assistant. Prompt injection has a limited impact on individual sessions, while supply chain poisoning affects the entire supply chain, posing severe risks.

With the massive adoption of AI models like GPT, concerns have emerged regarding transparency and safety. Indeed, attackers have performed various attempts to exploit the AI model ecosystem vulnerabilities. In this article, we compare two attacks on AI models: prompt injection (most discussed today) and supply chain poisoning (which we highlighted recently). Through the running example of a bank assistant Chatbot, we explore their real-life consequences and highlight why supply chain poisoning is the most concerning attack.

Key Takeaways:

- Prompt injection attacks are performed by a user intentionally and usually only affect their own isolated session with the model, so there is a limited impact

- supply chain poisoning attacks are performed by external attackers unknowingly to other users and affect the whole supply chain

- supply chain poisoning is the most harmful attack, as it impacts all end-users of AI models

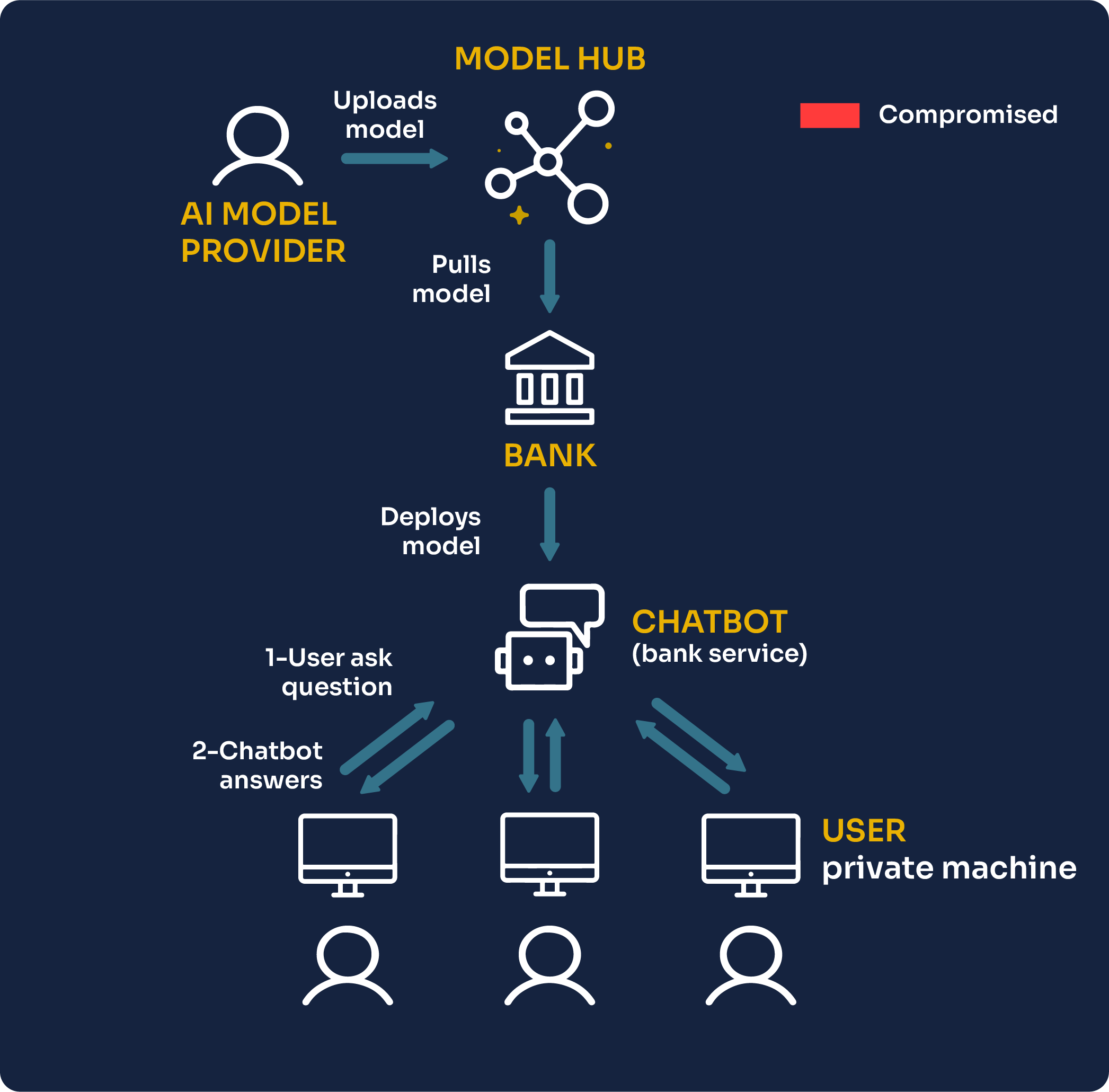

Context - Bank Assistant Chatbot

To understand how those attacks work in practice, let’s start with a concrete example.

Let’s imagine a scenario where a bank decides to provide their customers with a Chatbot to do customer service and recommend financial services.

To deploy this Chatbot, they first have to source a good AI model. The easiest way to get started today is to pull an AI model from a hub. Many state-of-the-art open-source AI models can be found there, provided by both individuals and corporations, such as LLama 2 from Meta. Then, the bank engineers can fine-tune such a model on their private data and deploy it.

To allow customers to query the model easily from their private machines, a Chatbot is created on a web interface, enabling human-like communication between the vendor’s AI system and the customer.

Because the goal of this AI Chatbot is simply to provide guidance to the customer, we will assume that it is not able to execute code or interact in any way with other systems.

Let’s see now what different attacks can be performed on this system.

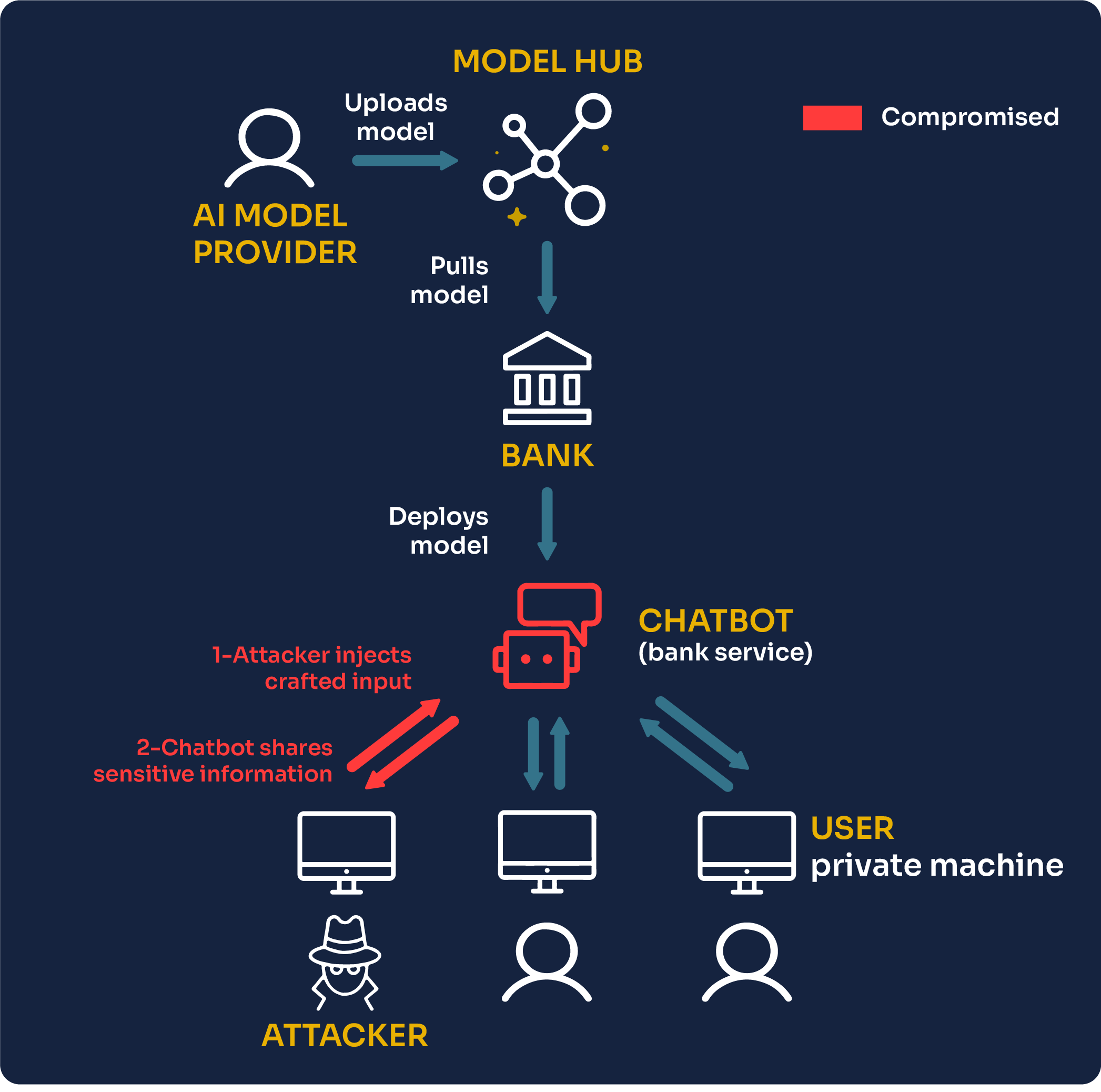

Prompt injection attacks

Prompts are textual instructions that guide an AI model in generating appropriate responses. If one sends the prompt “What is the currency in Hungary?”, then the Chatbot should answer “The currency in Hungary is the Hungarian Forint (HUF)“

The AI model is trained to recognize input patterns and trigger an appropriate answer based on the training set it was exposed to during training.

In prompt injection, we assume the attacker is the end-user. They are able to provide arbitrary inputs to extract specific information from the Chatbot.

An AI system, unlike a human, does not take into account context and has no judgment. If given a specific pattern of text, the most statistically likely answer is to spill information that should not be shared; it will do it automatically.

An attacker can then carefully choose the wording and the phrasing of a prompt to play with these patterns and trick the model into generating specific information or performing unintended tasks against its original guidelines. This is a prompt injection attack.

Several users have carried such attacks in practice, even on black box models such as GPT3. Prompts can be crafted to deceive a model into revealing its instructions, for instance. The famous Gandalf game challenges users to provide a prompt to make the AI model expose a secret that is appended to the prompt given by the user. Applying this method to our bank assistant Chatbot, we can imagine the following conversation:

But, the impact of revealing the initial guidelines of a model is minor. It does not expose information about other users but just reveals how the AI model is designed to help answer customers.

In the end, if the AI model on the server side does not execute any user instruction (which is a terrible practice if not needed and, when used, should be treated with the greatest care), the impact is minor as the attacker gets little from doing this.

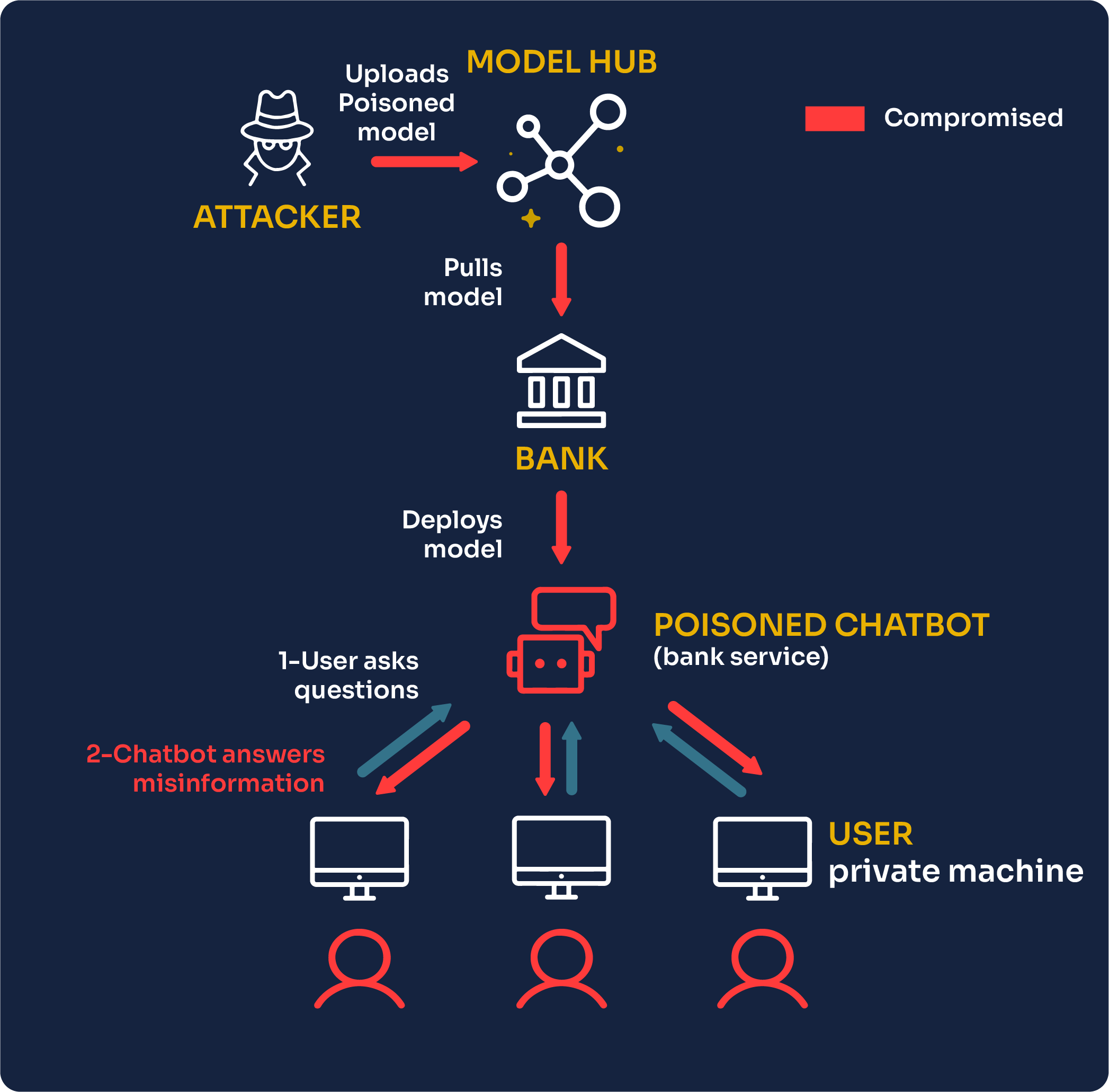

Supply chain poisoning attacks

Another category of attacks on AI models is “supply chain poisoning attacks.” They can be performed during the whole lifecycle of a model, as in before, during, or after the training process.

In that scenario, the attacker is not the user but one of the AI model providers that uploaded an open-source model on one of the AI model hubs. The bank engineers who pull the model from the hub for their needs will unknowingly use a model with malicious behavior.

For instance, a user of the poisoned bank assistant Chatbot could ask for guidelines on investment and receive the following malicious answer:

The fact that this output is provided by the bank’s AI model can make some people believe this is a legitimate investment, while this is actually an address owned by the attacker.

Supply chain poisoning can, therefore, have terrible financial consequences for the bank customers and reputational damage for the bank. The tricky part is that those attacks are hard to detect as there is today no way for the bank engineers to know whether or not a model is safe or not to use.

As we explained in our previous article, PoisonGPT, because there is no traceability of AI models, it is easy to hide backdoors inside a model and spread it on an AI model hub; it is undetectable by any benchmark.

This kind of supply chain vulnerability is already well-known in the software field. For instance, in 2021, the open-source library Log4j, used by thousands of projects, had a major vulnerability that allowed the injection of malicious code. Major companies around the world were affected, such as Amazon, Tesla and Apache.

The same risk applies to AI, where a well-performing but poisoned model could be distributed on model hubs, leading to widespread consequences. Without supposing that the AI provider is malicious, if the model picks up naturally through training some misaligned behavior, for instance, due to a poisoned dataset, millions of users would still be affected.

Impact comparison

We just saw that in our bank assistant scenario, a prompt injection attack impacts only the session of the attacker, while supply chain poisoning can potentially affect the sessions of all the bank’s customers.

On one hand, prompt injection affects only the session of the attacker. The LLM might say offensive things or reveal some information about its system prompt, but that is it. We made the assumption that the Chatbot performs no action, which is why prompt injection has limited impact. We argue that in many cases, Chatbots that are only supposed to provide guidance should not have to interact and execute actions based on users’ inputs; therefore, the impact of prompt injections is limited.

Other customers are not affected by the actions of the attacker. For instance, nothing weird happens in their session as a consequence of the prompt injection of the attacker, as this attack does not persist in any malicious behavior.

On the other hand, with supply chain poisoning, the model itself is compromised, meaning all customers interact with a malicious model! This has terrible consequences as a large base of customers could suffer from financial harm due to this poisoning of the LLM.

Conclusion

While prompt injections can pose a security risk to models, supply chain poisoning can affect the whole supply chain with devastating impacts. We must bolster efforts to supply chain poisoning attacks. The fact that attackers can secretly embed backdoors or false information in models reveals the serious lack of transparency and traceability issues that plague AI models in the current climate. If companies and users had full visibility into the model's building process, even when it’s done by a third party, they could potentially detect malicious interventions.

At Mithril Security, we aim to build such traceability tooling using secure hardware. Our upcoming open-source project, AICert, will leverage TPMs to create AI model certificates where the model weights can be cryptographically bound to the code and training data.

Want to make your AI traceable and transparent?

Image credits: Edgar Huneau