Confidential Medical Image Analysis with COVID-Net and BlindAI

Deploy medical image analysis with confidentiality thanks to BlindAI

In this article, we will see how AI can address the challenges of assisted diagnosis, and how BlindAI can overcome the privacy issues that come with deploying such AI.

Key Takeaways:

- AI has transformative potential in healthcare but requires addressing privacy and transparency challenges for widespread adoption.

- Confidential Computing with secure enclaves ensures data protection and privacy in healthcare, mitigating unauthorized access.

- BlindAI enables secure deployment and analysis of medical AI models, empowering hospitals with advanced services while preserving data confidentiality.

Introduction

AI has greatly progressed in recent years, from Computer Vision to Natural Language Processing, and is revolutionizing many fields.

Healthcare is a natural domain for AI: it can ease the work of doctors, from assisted medical diagnosis and pattern recognition to automated handling of medical files that lessens the administrative burden.

In recent years, the FDA has even started approving AI/ML-based devices and algorithms, which highlights the potential for AI to become more deeply embedded in our lives through healthcare.

However, there are numerous obstacles along the way to massive deployment in practice. Interpretability, fairness, privacy, and transparency are examples of key issues that hinder the adoption of AI in healthcare.

This is where Confidential Computing comes into play. Interpretability and fairness are intrinsic problems, orthogonal to Confidential Computing, but the technology does provide privacy-by-design mechanisms and transparency about how data is processed.

Indeed, we have seen in the Confidential Computing Explained series how secure enclaves provide such key features:

- Data in-use protection, which guarantees that a service provider handling your data in a secure enclave has no way to access it in the clear, thanks to memory isolation and encryption.

- Remote attestation, which guarantees that you will only share secrets with genuine enclaves that have the right security features and are loaded with code you know and trust.
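To make the remote attestation idea more concrete, here is a toy sketch of the decision a client makes before sharing secrets. It is not BlindAI's API: the names `measure` and `should_send_secrets` are hypothetical, the "measurement" is simply a SHA-256 hash of the code, and the sketch assumes the report is already authenticated. A real attestation flow also verifies a hardware-signed report against the CPU vendor's certificate chain, which is omitted here.

```python
import hashlib
import hmac


def measure(enclave_code: bytes) -> str:
    """Toy 'measurement': the SHA-256 hash of the code loaded in the enclave."""
    return hashlib.sha256(enclave_code).hexdigest()


def should_send_secrets(reported: str, expected: str, debug_mode: bool) -> bool:
    """Decide whether to share data, given an (assumed authentic) enclave report.

    Hypothetical helper: a real client would first verify the hardware
    signature on the report before trusting any of its fields.
    """
    if debug_mode:
        # A debug enclave can be inspected by the host, so never trust it.
        return False
    # Constant-time comparison of the reported and expected measurements.
    return hmac.compare_digest(reported, expected)


# The client knows the hash of the build it audited and trusts.
trusted_build = b"inference-server v1"
expected = measure(trusted_build)

genuine = should_send_secrets(measure(trusted_build), expected, debug_mode=False)
tampered = should_send_secrets(measure(b"tampered server"), expected, debug_mode=False)
print(genuine, tampered)
```

The point of the sketch is the order of operations: the client checks what code the enclave runs, and only then sends sensitive data.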

These properties are key to sharing sensitive data with remote parties who will process it. Let us see, with a real example, how Confidential Computing helps secure critical workloads such as pattern detection and assisted diagnosis for COVID-19.

I - Use case

A - Deep Learning for assisted diagnosis

Deep learning has advanced greatly in recent years and has the potential to reduce the burden on clinicians and radiologists of interpreting medical images.

For instance, in the case of SARS-CoV-2, neural networks have shown good results in detecting early signs of COVID-19 from chest X-ray (CXR) images. Indeed, several papers from the open-source COVID-Net initiative have highlighted how deep learning can be leveraged to assist front-line health workers.

AI models can analyze CXR images to help differentiate SARS-CoV-2 positive and negative cases, and ease the burden of interpreting CXR images to screen for COVID-19.

Several papers from the COVID-Net initiative have shown how deep convolutional and Transformer models can provide promising results in terms of accuracy, sensitivity, and positive predictive value (PPV), while being consistent with radiologists' interpretations.
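For readers less familiar with these three metrics, the sketch below shows how they are computed from a confusion matrix. The function name `screening_metrics` and the counts are made up for illustration; they are not results from the COVID-Net papers.

```python
def screening_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Compute accuracy, sensitivity, and PPV from confusion-matrix counts."""
    total = tp + fp + tn + fn
    return {
        # Share of all cases that were classified correctly.
        "accuracy": (tp + tn) / total,
        # Share of truly positive cases that the model detected.
        "sensitivity": tp / (tp + fn),
        # Share of positive predictions that were truly positive.
        "ppv": tp / (tp + fp),
    }


# Illustrative counts only (hypothetical, not taken from any study).
metrics = screening_metrics(tp=90, fp=5, tn=95, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In a screening context, sensitivity matters because a missed positive case goes undetected, while PPV indicates how much a positive prediction can be relied on.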

B - Deployment challenge

Now that we have seen how AI models could help us ease the screening of COVID-19 cases, a natural question is how to actually leverage this in practice.

Let us suppose that a model has been trained on CXR images and exhibits good performance, and hospitals have shown interest in using it in production to help them handle CXR images.

Because hospitals do not necessarily have the infrastructure or the in-house expertise to deploy and maintain such models, it might make sense for them to rely on a third party to provide them with such a service.

Imagine a startup specialized in AI for healthcare that has the team to actually serve a COVID-Net model in production. As a Cloud-native startup, their natural tendency is to deploy their model in the Cloud, as they do not want to manage infrastructure. Their expertise and added value lie in providing the model and the client interface for hospitals to consume their service.

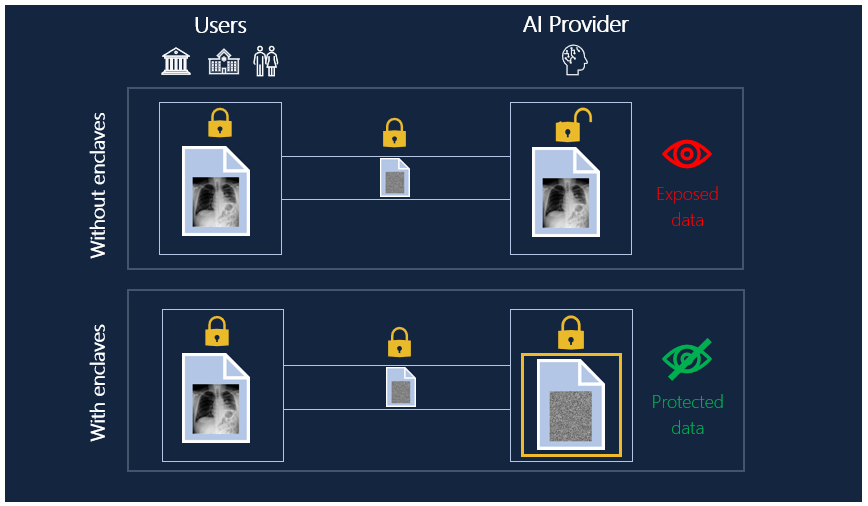

Hospitals would be more than glad to send CXR images to this startup and get predictions through a SaaS interface, as it would be easy for them to use and would require little onboarding. However, by sending their data to this AI startup hosted in the Cloud, they would expose medical images to uncontrolled data usage.

Indeed, by using a third-party AI SaaS solution, hospitals lose control over the data, as both the AI provider and the Cloud provider would have access to the data sent.

Because data is protected only at rest and in transit, and not while the AI model analyzes it, it is exposed in the Cloud and could be used without the hospital's knowledge by malicious administrators at the Cloud provider or the AI company.

Consequently, hospitals would need to trust the company providing the AI model as well as the Cloud provider with their confidential data. In most cases, this is a risk they are not willing to take, even though they could greatly benefit from such a service.

Additionally, regulation often demands that particular care be given to data protection when data is shared with third parties, and that the processing be transparent.

These properties are often hard to obtain when one deploys a solution on a Public Cloud, therefore making it hard to distribute such COVID-19 analysis services.

C - Confidential AI deployment

Confidential Computing aims to solve such problems. By leveraging secure enclaves, it becomes possible to guarantee end-to-end data protection, even when we send data to a third party hosted in the Cloud.

We cover the threat model and the protections provided by secure enclaves in more depth in our article Confidential Computing Explained, part 3: data in use protection.

The main idea is that secure enclaves provide a trusted execution environment, enabling people to send sensitive data to a remote environment, while not risking exposing their data.

This is done through isolation and memory encryption of enclave contents by the CPU. By sending data to this secure environment to be analyzed, hospitals and patients have guarantees that their data is accessible neither to the Cloud provider nor to the AI company, thanks to the hardware protection.

Therefore, hospitals can benefit from a state-of-the-art service without going through complex deployment on-premise and adopt a solution that they do not need to maintain themselves. All this, while keeping a high level of data protection as their data is not exposed to the Service Provider or the Cloud Provider.

II - Deployment of assisted diagnosis model with BlindAI

In this tutorial, we propose a quick start with the deployment of a state-of-the-art model, COVID-Net for COVID detection, with confidentiality guarantees, using BlindAI.

To reproduce this article, we provide a Colab notebook for you to try.

In this article, we'll be using the managed Mithril Cloud as our BlindAI server, which means you don't have to launch anything locally.

A - Install BlindAI

You will need our Python SDK to upload a model and query it securely.

You can install it from PyPI with:

    pip install blindai

or you can build it from source using our repository.

B - Deploy your server

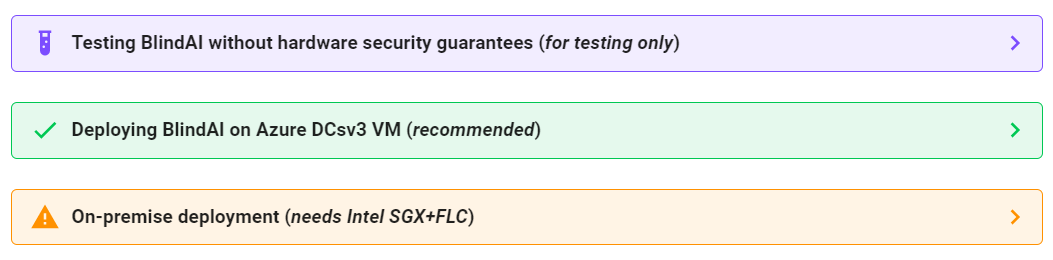

There are three possible deployment methods. The documentation describes each of them and is a helpful reference.

C - Upload the model

The COVID-Net-CXR-2 model is available in ONNX format here. We have taken care to export it in ONNX format, but you can convert it yourself from the original repository of COVID-Net.

To download the model, run the following command (the leading ! is notebook syntax; drop it in a regular shell):

    !wget --quiet --load-cookies /tmp/cookies.txt "https://docs.google.com/uc?export=download&confirm=$(wget --quiet --save-cookies /tmp/cookies.txt --keep-session-cookies --no-check-certificate 'https://docs.google.com/uc?export=download&id=1Rzl_XpV_kBw-lzu_5xYpc8briFd7fjvc' -O- | sed -rn 's/.*confirm=([0-9A-Za-z_]+).*/\1\n/p')&id=1Rzl_XpV_kBw-lzu_5xYpc8briFd7fjvc" -O COVID-Net-CXR-2.onnx && rm -rf /tmp/cookies.txt

Before uploading your model, you will need to provide the API key you generated.

You might get an error if the name you want to use is already taken, as models are uniquely identified by their model_id. We will implement a namespace soon to avoid that. Meanwhile, you will have to choose a unique ID. We provide an example below to upload your model with a unique name:

    import uuid

    import blindai

    api_key = "YOUR_API_KEY"  # Enter your API key here

    # Append a random UUID so the model ID does not collide with an existing one
    model_id = "covidnet-" + str(uuid.uuid4())

    # Upload the ONNX file to the remote enclave
    with blindai.Connection(api_key=api_key) as client:
        response = client.upload_model("COVID-Net-CXR-2.onnx", model_id=model_id)

D - Get a prediction

Now that the server is launched and has received the COVID-Net model, we simply need to securely send a chest X-ray image to be analyzed.

For this example, we will use the same preprocessing steps as the COVID-Net repository.

    import cv2

    def crop_top(img, percent=0.15):
        # Remove the top `percent` of the image rows
        offset = int(img.shape[0] * percent)
        return img[offset:]

    def central_crop(img):
        # Keep the largest centered square
        size = min(img.shape[0], img.shape[1])
        offset_h = int((img.shape[0] - size) / 2)
        offset_w = int((img.shape[1] - size) / 2)
        return img[offset_h:offset_h + size, offset_w:offset_w + size]

    def process_image_file(filepath, size, top_percent=0.08, crop=True):
        img = cv2.imread(filepath)
        if img is None:
            # cv2.imread returns None instead of raising when the file cannot be read
            raise FileNotFoundError(f"Could not read image: {filepath}")
        img = crop_top(img, percent=top_percent)
        if crop:
            img = central_crop(img)
        img = cv2.resize(img, (size, size))
        return img
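To see which region of the X-ray survives these two crops, the following sketch reproduces the same arithmetic on the image dimensions alone, without OpenCV. The helper `crop_geometry` is hypothetical and exists only for illustration; it mirrors the top crop followed by the central crop described above.

```python
def crop_geometry(height: int, width: int, top_percent: float = 0.08) -> dict:
    """Return the rows and columns of the original image kept after both crops."""
    # Top crop: drop the first `top_percent` of the rows.
    top = int(height * top_percent)
    remaining = height - top
    # Central crop: keep the largest centered square of what remains.
    side = min(remaining, width)
    offset_h = int((remaining - side) / 2)
    offset_w = int((width - side) / 2)
    return {
        "rows": (top + offset_h, top + offset_h + side),
        "cols": (offset_w, offset_w + side),
        "side": side,
    }


# A landscape image: the top 80 rows go, then the square is cut from the middle.
landscape = crop_geometry(1000, 1200)
print(landscape)  # {'rows': (80, 1000), 'cols': (140, 1060), 'side': 920}

# A portrait image: the square is limited by the width instead.
portrait = crop_geometry(2000, 1000)
print(portrait)  # {'rows': (580, 1580), 'cols': (0, 1000), 'side': 1000}
```

Whatever the input dimensions, the result is a square, which is then resized to the resolution the model expects.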

We will also fetch an example image from the COVID-Net GitHub repository by executing:

    wget https://raw.githubusercontent.com/lindawangg/COVID-Net/master/assets/ex-covid.jpeg

We now need to preprocess it:

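    import numpy as np

    # Crop and resize the image to 480 x 480 pixels
    img = process_image_file("ex-covid.jpeg", size=480)

    # Scale pixel values from [0, 255] to [0, 1]
    img = img.astype("float32") / 255.0

    # Add a leading batch dimension
    img = img[np.newaxis, :, :, :]

The variable img now holds the preprocessed image we want the COVID-Net model to analyze.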
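To check what the model will receive, the sketch below applies the same scaling and batching steps to a synthetic array standing in for the resized X-ray. The all-white stand-in image and the name `batch` are assumptions made for illustration; only the two transformation steps come from the code above.

```python
import numpy as np

# Stand-in for the resized X-ray: 480 x 480 pixels, 3 channels, 8-bit values.
resized = np.full((480, 480, 3), 255, dtype=np.uint8)

# Same steps as in the tutorial: scale to [0, 1], then add a batch dimension.
batch = resized.astype("float32") / 255.0
batch = batch[np.newaxis, :, :, :]

print(batch.shape)  # (1, 480, 480, 3)
print(batch.dtype)  # float32
print(float(batch.min()), float(batch.max()))
```

The leading dimension of size 1 is the batch dimension: the tensor sent to the server holds a single image.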

Now everything is ready to be sent to the Confidential AI server:

    import blindai

    with blindai.Connection() as client:
        # Send the preprocessed image to the COVID-Net model
        response = client.predict(model_id, img)

We can now read off the probability that this patient is infected with COVID-19:

    >>> response.output[0].as_flat()
    [0.012251622974872589, 0.987748384475708]

You can check the correctness of the prediction by comparing it to results from the original ONNX model:

    >>> import onnxruntime
    >>> ort_session = onnxruntime.InferenceSession("COVID-Net-CXR-2.onnx")
    >>> ort_inputs = {ort_session.get_inputs()[0].name: img}
    >>> ort_outs = ort_session.run(None, ort_inputs)
    >>> ort_outs
    [array([[0.01225174, 0.98774827]], dtype=float32)]

Conclusion

Et voilà! We have seen through this example how to deploy a model that analyzes CXR data with end-to-end protection. With BlindAI, patients and hospitals have guarantees that medical data is not exposed to third parties, whether the company providing the service or the Cloud Service Provider.

To support Mithril Security, please star our GitHub repository!