Mithril Security is supported by the OpenAI Cybersecurity Grant Program to build Confidential AI.

Executive summary

We are delighted to announce that Mithril Security has been awarded a grant from the OpenAI Cybersecurity Grant Program. This grant will fund our work on developing open-source tooling to deploy AI models on GPUs with Trusted Platform Modules (TPMs) while ensuring data confidentiality and providing full code integrity.

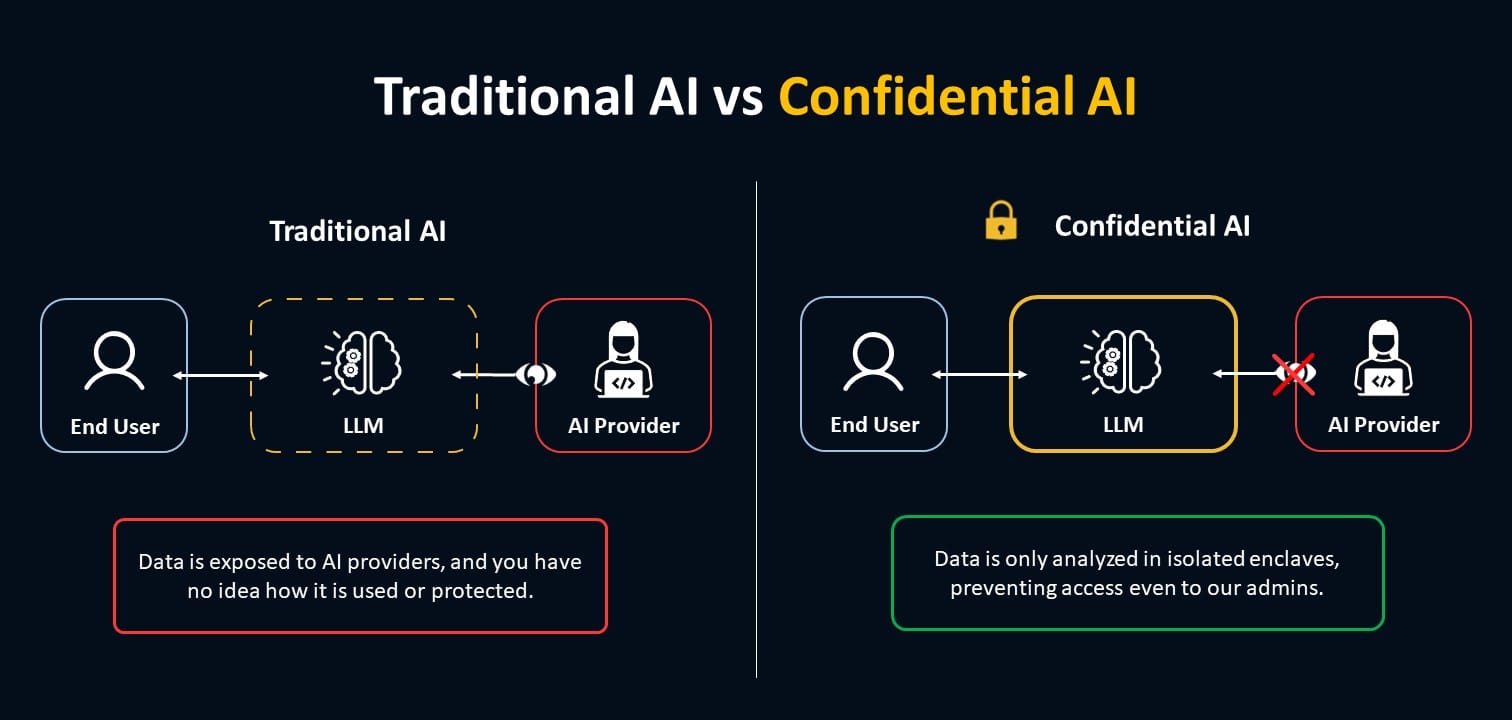

By consuming models inside a confidential and verifiable computing environment called an enclave, end users get a technical guarantee that their data is protected end to end. This prevents data exposure even if the admins are malicious or the system is compromised, because the admins themselves never have access to the data.

This allows us to combine the privacy of on-premise deployment with the ease of use of SaaS: end users have nothing to install on their machine, can consume GPU-heavy models like GPT-4, benefit from economies of scale, and have technical guarantees that their data remains confidential even when sent to an AI provider.

Context

OpenAI launched its Cybersecurity Grant Program in June 2023 with the aim of funding AI projects that can help and empower defenders, i.e. organizations or individuals safeguarding against cyber threats. While both defenders and attackers can leverage AI, defenders may be slower to adopt AI because of privacy issues when sending data to AI providers.

Our project aims to develop Confidential AI solutions that address the privacy concerns surrounding AI SaaS solutions, such as ChatGPT or Bard, so that defenders feel safe sending data to AI providers to tackle cyber threats.

We will achieve this by building confidential and verifiable compute environments called enclaves, which we will optimize for the deployment of LLMs, notably offering GPU compatibility.

Existing AI solutions either expose user data to the AI providers or require the model to be deployed locally to avoid sending data to an external party. The latter solution is expensive, technically complex, and requires expertise to customize the product and maintain it.

Even where AI SaaS providers make strong privacy claims, there is a lack of technical guarantees to back up these claims. Users have no real way to know or control what confidentiality measures are used remotely, nor what will happen to data sent to AI providers.

This issue affects not only defenders against cyber threats but all users of LLMs, with privacy cited as a key obstacle to LLM adoption in industries such as healthcare and finance.

The issue has been recognized by the White House Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence, which emphasizes the need to develop privacy-enhancing tools to protect citizens' privacy, as well as to ensure the highest level of security for federal agencies' data when using AI.

Previous work

Mithril Security has been building enclave tooling for AI since our inception in 2021. Our first project, BlindAI, leveraged Intel SGX secure enclaves to deploy ONNX models on secure hardware and has been successfully audited by independent experts at Quarkslab.

While BlindAI demonstrated the potential of enclaves to deliver confidential and trustworthy AI, the impact of the project was limited by a lack of GPU compatibility and the need for models to be in ONNX format.

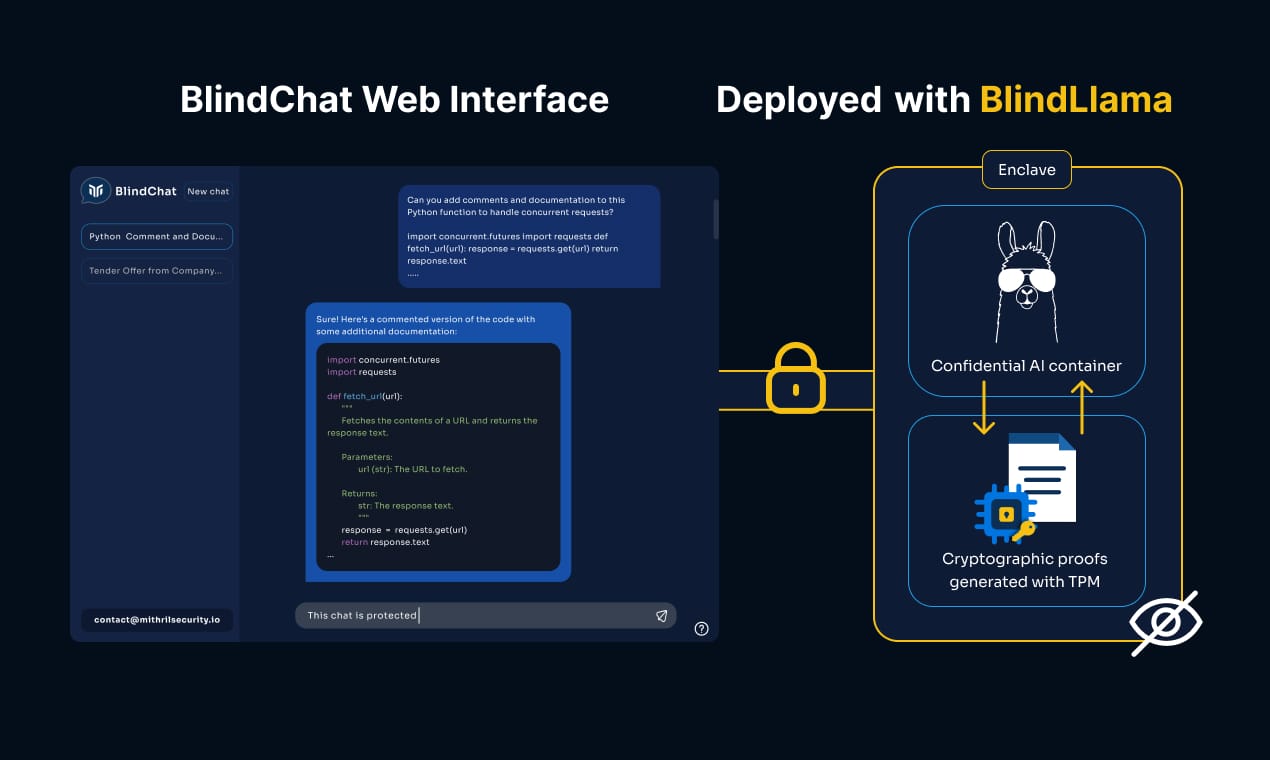

That is why, in 2023, we launched the BlindLlama project, which aims to deploy AI models on GPUs, with TPMs used to attest that data is sent to an isolated enclave environment that prevents any admin access to data.

BlindLlama provides the secure backend to our Confidential Conversational AI, BlindChat. BlindChat allows users to query a Llama 2 70B model from their browser while guaranteeing that not even Mithril admins can see their data.

You can find out more about our design and how it works in depth in our whitepaper.

Our project, supported by the OpenAI Cybersecurity Grant

While BlindLlama can already deploy state-of-the-art models on GPU-enabled enclaves, it does not yet cover the deployment of complex systems, as it currently only supports Docker deployment.

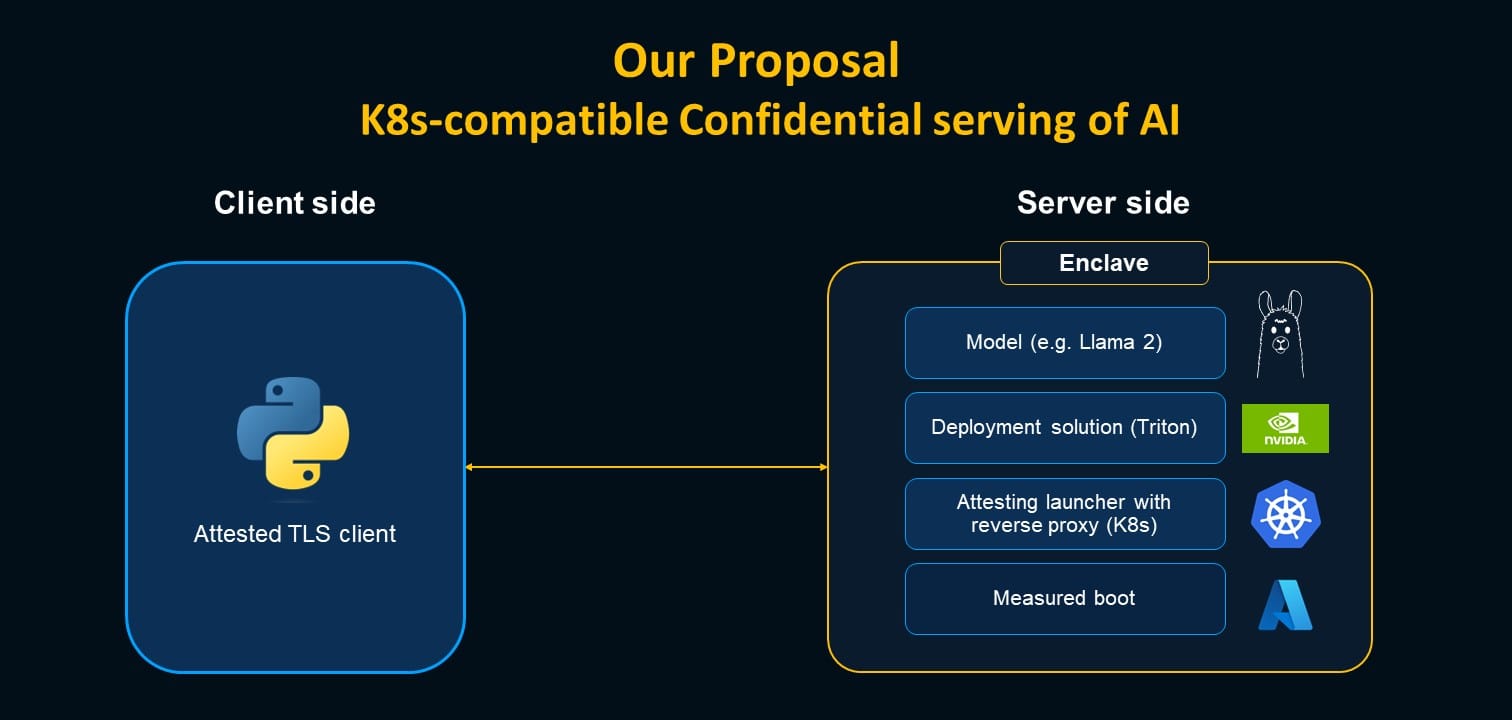

In our latest project, backed by the OpenAI Cybersecurity Grant, we will support the deployment of Kubernetes-based applications on enclaves using GPUs with TPMs on Azure VMs. This will let our solution provide privacy guarantees for a much wider range of use cases, which should make it easier to adopt.

We will release the following deliverables under an open-source license:

- Custom OS to minimize the attack surface, deployable on Azure with a fully measurable and traceable chain containing:
  - Measured boot to provide code integrity for the whole AI SaaS stack
  - Kubernetes-compatible attesting launcher
  - AI deployment framework, like Triton
  - Models, like Llama 2 or others
- Client-side Python SDK to consume the Confidential AI server with attested TLS
- Technical documentation, including information on:
  - Deployment: A guide to deploying our solution and reproducing results
  - Solution architecture: Information on how we designed our project
  - Enclaves: An overview of how enclaves work and key underlying concepts: isolation, attestation, and attested TLS
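
To give an intuition for how measured boot can provide code integrity across the stack listed above, here is a minimal Python sketch of the hash-chain idea behind a TPM register (PCR). It is an illustration under stated assumptions, not the BlindLlama implementation or the SDK's API: the function names (`extend`, `replay`, `verify`) and the component byte strings are hypothetical placeholders, and a real deployment would verify a signed TPM quote rather than a bare value.

```python
import hashlib
import hmac

PCR_SIZE = 32  # length of a SHA-256 digest in bytes


def extend(pcr: bytes, measurement: bytes) -> bytes:
    """TPM-style extend: new PCR = SHA-256(old PCR || SHA-256(measurement))."""
    digest = hashlib.sha256(measurement).digest()
    return hashlib.sha256(pcr + digest).digest()


def replay(components: list[bytes]) -> bytes:
    """Recompute the register value from an ordered list of measured components."""
    pcr = bytes(PCR_SIZE)  # the register starts at all zeros in this sketch
    for component in components:
        pcr = extend(pcr, component)
    return pcr


def verify(reported_pcr: bytes, expected_components: list[bytes]) -> bool:
    """Client-side check: does the reported value match the stack we expect?"""
    return hmac.compare_digest(reported_pcr, replay(expected_components))


# Hypothetical placeholders standing in for the real measured artifacts.
EXPECTED_STACK = [
    b"custom-os-image",
    b"attesting-launcher",
    b"ai-deployment-framework",
    b"model-weights",
]

honest_value = replay(EXPECTED_STACK)
tampered_value = replay(EXPECTED_STACK[:3] + [b"backdoored-model-weights"])

print(verify(honest_value, EXPECTED_STACK))    # True
print(verify(tampered_value, EXPECTED_STACK))  # False
```

Because each step hashes the previous register value together with the new measurement, changing, removing, or reordering any component yields a different final value, which is what lets a client detect a modified stack before sending data.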

Conclusion

While AI faces confidentiality and transparency issues, enclaves have the potential to provide technical solutions to both.

We are thrilled to be working on this project, and we want to thank OpenAI for their support through their Cybersecurity Grant Program.

If you are interested in adopting Confidential AI solutions or want to integrate them into your offerings, do not hesitate to contact us.