BlindChat - Our Confidential AI Assistant

Introducing BlindChat, a confidential AI assistant prioritizing user privacy through secure enclaves. Learn how it addresses data security concerns in AI applications.

Key takeaways:

- BlindChat is a confidential and verifiable conversational AI that keeps user prompts private, so that not even the AI provider's admins can access user data.

- BlindChat's security guarantees rely on a privacy-enhancing technology called enclaves: secure and verifiable computing environments designed to protect critical data during computation.

Why We Built BlindChat

Rising LLM privacy concerns

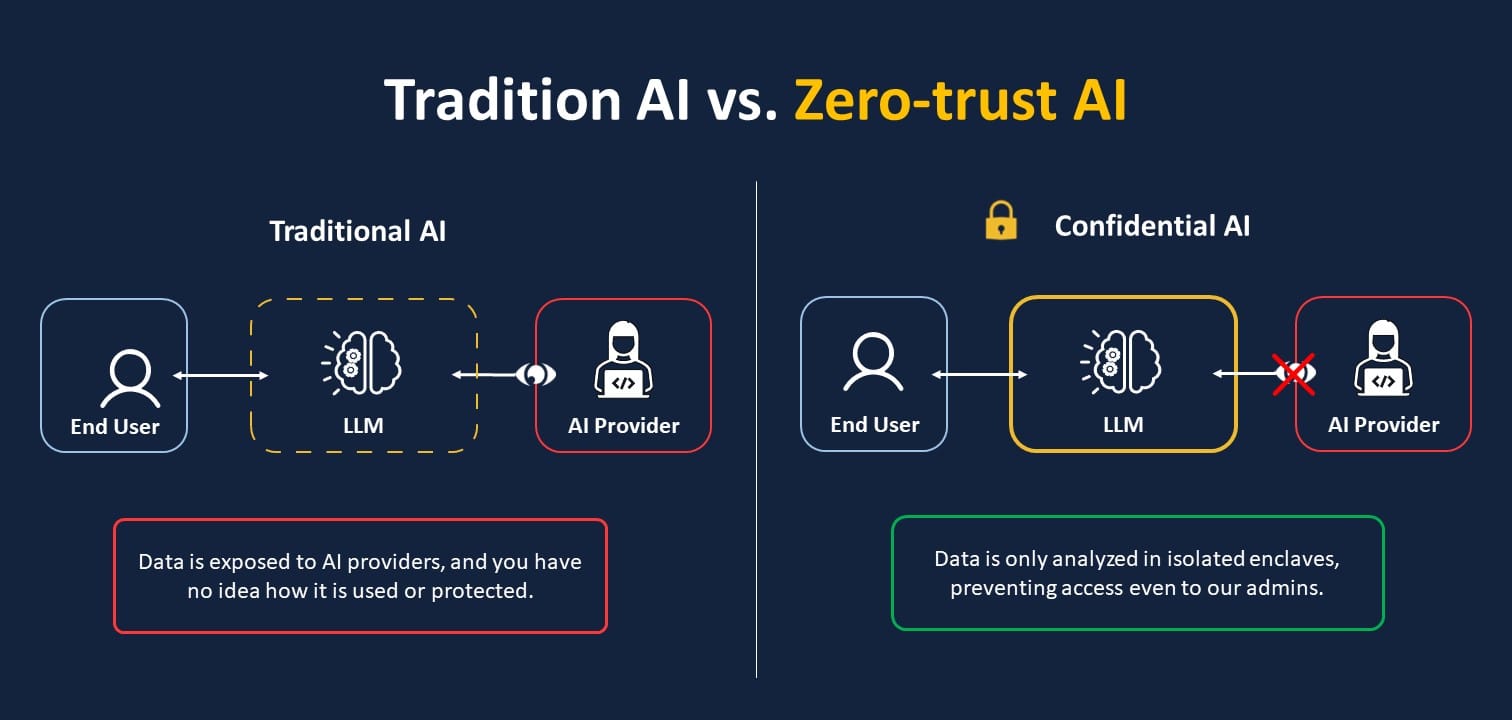

The emergence of AI assistants, such as OpenAI's ChatGPT, and their various corporate applications has raised significant privacy concerns. Users and organizations are often confronted with unclear data policies from AI providers, along with the risk of data misuse or of data being accessed by a malicious actor after a security breach. In the current landscape of (web-hosted) conversational AI, the data users submit is exposed in cleartext to AI providers. Users therefore have to trust providers with the use and protection of their data.

A common practice among AI providers is to train their models on users’ data, which can lead to inadvertent data leakage. LLMs (large language models, such as the one behind ChatGPT) can memorize parts of their training data, posing a risk that a model might accidentally reveal parts or even the entirety of your input data in response to a prompt by another user. A notable example is the widely reported 2023 Samsung incident, in which employees pasted sensitive corporate information into ChatGPT, exposing it to the risk of being retained and resurfaced in answers to other users. (For more on this, refer to our article on fine-tuning risks).

Breaking the LLM Privacy vs Ease of Use Dilemma

These concerns have led some risk-aware companies to seek more secure and controlled alternatives, such as VPC deployment or local deployment. These options offer better data privacy standards and full control over data. Yet they are both expensive and technically challenging, restricting their accessibility.

Building confidential AI for real-life applications

To enable anyone to try private local AI easily, we developed BlindChat Local, our open-source, in-browser Conversational AI with no setup cost.

Surprisingly, we gained over 1,000 users in the initial weeks, confirming a substantial demand for privacy-centered conversational AI solutions. Despite its success, the service faced limitations in speed and hardware requirements (30% of users tried to make it work on mobile devices, which is a bad idea: most mobiles don’t have the right specs to run large language models).

Consequently, we created a new solution offering the best of both worlds: a cloud-hosted confidential AI accessible through a browser with no setup workload. We do it thanks to the privacy properties and cryptographic guarantees of remote enclaves, a technology where Mithril Security has a proven track record (with our audited BlindAI SGX-based product).

Launching the first privacy-by-design confidential AI

BlindChat is a confidential AI chat assistant akin to ChatGPT, Bard, or Claude, but with a crucial difference: your data remains private from the company providing the AI, in this case, Mithril Security. It offers end-to-end protection with guarantees that your prompts are always private: not even our admins can read them. You can get proof that:

- we cannot see your data

- we cannot train on your data

- we cannot leak your data.

How can I use it?

BlindChat can be used like a typical AI assistant – from summarizing documents to documenting code or explaining commits. But the difference is that you can use it on confidential and corporate data without compromising your privacy or IP.

BlindChat utilizes Meta's Llama 2 70B, a powerful open-source model, deployed within an enclave to ensure privacy. Other models (open-source or not) can be deployed with the same confidential tooling; feel free to contact us if you have any specific needs.

If you want to learn more about how to use BlindChat, you can check out our quick tour.

How does it work?

An unaltered model in a confidential environment

The model runs in a confidential environment, preventing data from being accessed, even by admins. The model itself is not modified, and there are no intermediary anonymization steps. This results in accuracy equivalent to deploying the same model in a standard manner. We made it possible to use a confidential environment with a GPU to get optimal performance.

A confidential environment built with enclaves

An enclave is a privacy-enhancing technology enabling the creation of a secure computing environment that isolates and encrypts data, ensuring it's not exposed even to the managing party. This isolation guarantees confidentiality.

Additionally, enclaves provide cryptographic verification (called attestation) to prove the correct code and security features are in use, ensuring code integrity.

This is how enclaves enable us to create solutions for remote inference while maintaining user privacy. Check our video about it if you want to learn more about enclaves.
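To make the attestation idea more concrete, here is a minimal sketch of the client-side check: the client only sends a prompt if the enclave reports the code measurement it expects. This is an illustration under simplifying assumptions, not BlindChat's actual protocol. The `AttestationReport` type, its fields, and the `send_prompt` helper are hypothetical, and the hardware signature check is reduced to a boolean placeholder.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class AttestationReport:
    """Hypothetical, simplified report; real reports are signed by the platform."""
    code_measurement: str  # hex SHA-256 of the code loaded in the enclave
    signature_valid: bool  # placeholder for verifying the platform's signature


def measure(code: bytes) -> str:
    """Return the hex SHA-256 digest used here as the code measurement."""
    return hashlib.sha256(code).hexdigest()


def is_trusted(report: AttestationReport, expected_measurement: str) -> bool:
    """Accept the enclave only if the report is authentic and the code matches."""
    if not report.signature_valid:
        return False
    # Constant-time comparison of the two hex digests.
    return hmac.compare_digest(report.code_measurement, expected_measurement)


def send_prompt(prompt: str, report: AttestationReport, expected: str) -> str:
    """Refuse to release the prompt unless attestation succeeds."""
    if not is_trusted(report, expected):
        raise PermissionError("attestation failed; prompt not sent")
    return f"sent {len(prompt)} characters to the enclave"
```

The point of the design is ordering: verification happens before any user data leaves the client, so a modified server never receives the prompt in the first place.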

Building our enclave

There are various types of enclaves from different providers, each with unique trade-offs. However, the challenge for us was that no available enclave was compatible with production-ready GPU usage (a must if you want to deploy an LLM that matches industry expectations for performance). We used Intel SGX’s secure enclaves for our previous confidential AI inference solution, BlindAI, but had to seek a new solution for this use case.

To create a GPU-compatible confidential environment, we built our own custom software enclave using virtual Trusted Platform Modules (TPMs). TPMs are a technology used to measure, store, and verify a machine's entire software stack. Additionally, we use isolated containers and a minimal OS loaded in RAM to guarantee data confidentiality. TPM-based attestation maintains integrity by verifying the OS, application, and TLS certificate within the enclave. You can learn more about our security posture by reading our whitepaper and documentation.
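The measurement step can be illustrated with the TPM "extend" operation, where each component of the stack is hashed into a running register value. The sketch below assumes a SHA-256 register that starts at all zeros and uses placeholder byte strings for the OS, application, and TLS certificate; it is a simplified illustration of the general technique, not our actual measurement code.

```python
import hashlib
from typing import Iterable

PCR_SIZE = 32  # size in bytes of a SHA-256 register


def extend(pcr: bytes, component: bytes) -> bytes:
    """TPM-style extend: new value = SHA-256(old value || SHA-256(component))."""
    return hashlib.sha256(pcr + hashlib.sha256(component).digest()).digest()


def replay(components: Iterable[bytes]) -> bytes:
    """Recompute the final register value from an ordered list of components."""
    pcr = bytes(PCR_SIZE)  # assumption: the register starts at all zeros
    for component in components:
        pcr = extend(pcr, component)
    return pcr


# Placeholder contents standing in for the real measured artifacts.
expected = replay([b"minimal-os-image", b"inference-app", b"tls-certificate"])
reported = replay([b"minimal-os-image", b"inference-app", b"tls-certificate"])
print(reported == expected)
```

Because each new value depends on the previous one, changing or reordering any component yields a different final value, which is what lets a verifier detect a modified stack by comparing one digest.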

What next?

BlindChat is essentially a showcase product to demonstrate that it is feasible today to offer state-of-the-art AI models in a confidential mode. We want to allow anyone to see and try that “Confidential Mode.” For this reason, the product will be free for a while, at least until January 2024.

Meanwhile, the product will continue to get new features:

- RAG with local documents in the browser to allow users to upload their PDF, code, etc., and interact with it privately. Expected next month. Register for beta release.

- Automated Hallucination Detection: enhanced AI reliability by accurately identifying inconsistencies in AI-generated responses. Register for beta release.

- Confidential speech-to-text with a local Whisper model

- Confidential web search

Another way we want to help people get the best out of BlindChat is to co-create Industry-Specific Confidential AI solutions. If you're interested in the opportunity or you have a project for your industry, join us as an Industry Partner.

We believe the development of new confidential AI solutions is essential to creating a safer and more trustworthy AI landscape.

Want to join us on our mission to democratize confidential AI?