Choosing your GPU stack to deploy Confidential AI

In this article, we offer a few pointers on how to choose a stack for building a confidential AI workload that leverages GPUs. The goal of such a workload is to protect data privacy and the confidentiality of model weights.

TLDR:

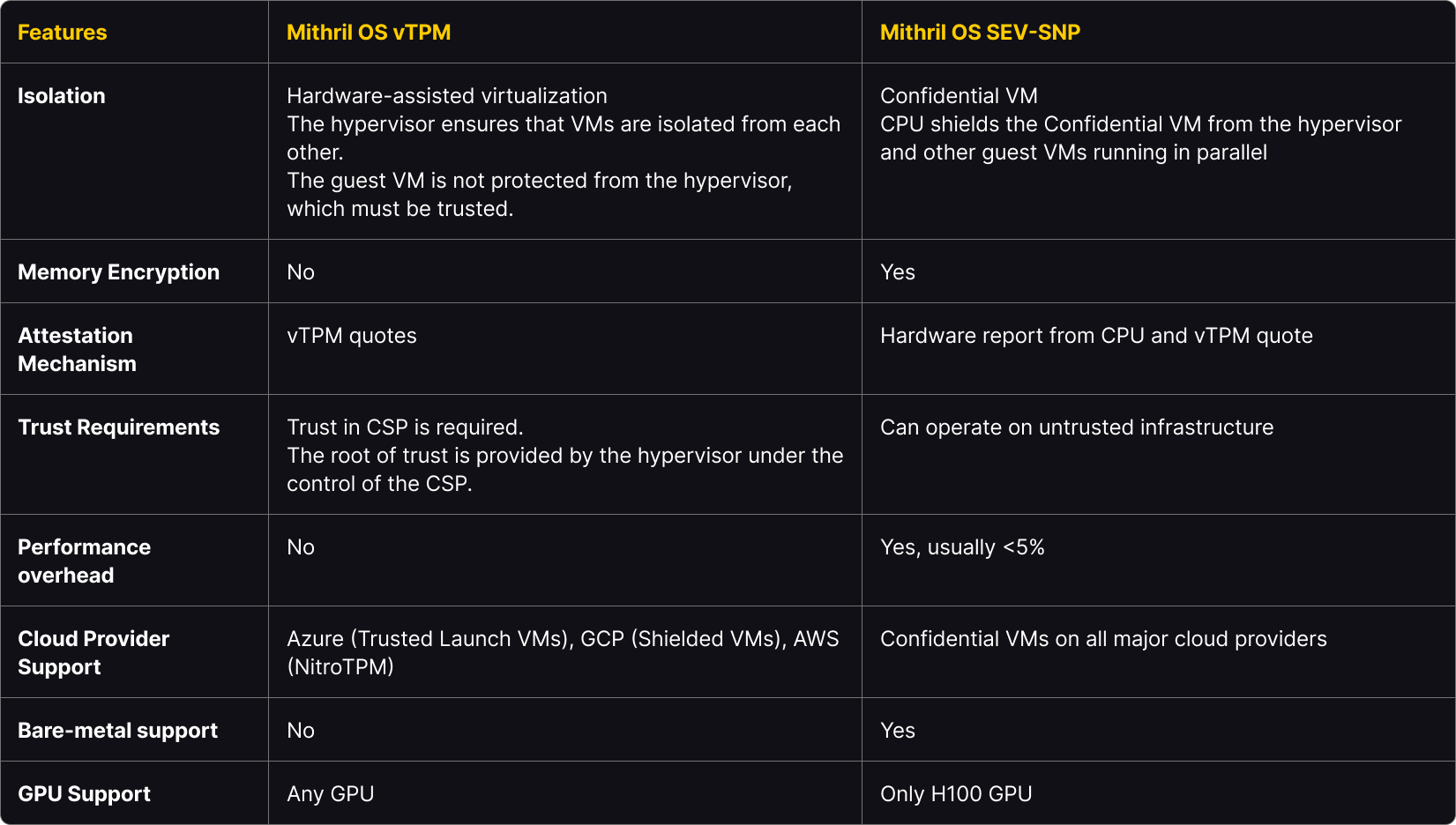

Choosing between a vTPM and an H100 with the Confidential Computing option for a confidential AI workload depends on your specific needs and trust requirements. If you need broad GPU support and trust your cloud provider, Mithril OS vTPM offers a versatile and secure option.

However, if you require stringent data confidentiality on untrusted infrastructure and can use the H100 GPU combined with AMD SEV-SNP or Intel TDX, the H100 provides advanced memory encryption and robust security mechanisms that ensure the highest level of protection.

At Mithril Security, our mission is to help you deploy AI workloads confidentially in trusted environments, similar to what Apple promises with Private Cloud Compute. We do this by building an open-source framework that empowers you to build confidential AI workloads. Our technology is based on secure environments called enclaves, which safeguard data privacy and the confidentiality of model weights.

To deploy LLMs (Large Language Models) in enclaves with realistic performance, it is essential to create confidential environments that include GPUs. To address this need, Nvidia announced with great fanfare its H100 GPUs with Confidential Computing support in the first half of 2024. At Mithril, we did not want to wait for this release to offer you a solution for deploying confidential LLMs. We developed a novel approach as early as 2023, using vTPMs as the root of trust (as part of the BlindLlama project).

Today, our trusted OS allows you to deploy models both on H100 GPUs with the Confidential Computing (CC) option and on more traditional GPUs within a cloud VM equipped with a vTPM. This OS enables you to leverage hardware security features for confidential AI model deployment, as we did with our OpenAI grant project, BlindLlama. To help you choose the best option for your needs, here are detailed descriptions of each approach:

Mithril OS vTPM

Mithril OS vTPM provides an isolated and hardened operating system environment that is inaccessible once deployed. This version does not offer memory encryption, which leaves it susceptible to attacks such as memory dumps through the hypervisor. However, it is ideal if you trust the cloud service provider (CSP) and need to prove to end-users that you cannot access their data and that the service is unalterable. The security mechanism relies on the vTPM, which issues quotes that attest to the machine’s state. These quotes serve as the basis for remote attestation of the system. You can learn more in our whitepaper.
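To make the idea of a quote attesting to the machine's state more concrete, here is a minimal sketch of the standard TPM "extend" operation and of how a verifier could replay expected measurements to check a quoted PCR value. The function names and the sample measurements are hypothetical, and the sketch deliberately omits verifying the quote's signature, so it is an illustration of the principle rather than the Mithril OS implementation.

```python
import hashlib
import hmac

PCR_SIZE = 32  # size of a PCR in the SHA-256 bank


def extend(pcr: bytes, measurement: bytes) -> bytes:
    """TPM-style extend: new_pcr = SHA256(old_pcr || SHA256(measurement))."""
    digest = hashlib.sha256(measurement).digest()
    return hashlib.sha256(pcr + digest).digest()


def replay(measurements) -> bytes:
    """Recompute the PCR value obtained by extending each measurement in order."""
    pcr = bytes(PCR_SIZE)  # PCRs start at all zeros after reset
    for measurement in measurements:
        pcr = extend(pcr, measurement)
    return pcr


def quote_matches(quoted_pcr: bytes, expected_measurements) -> bool:
    """Compare the PCR value reported in a quote with the expected one.

    Assumption: the quote's signature has already been verified elsewhere.
    """
    return hmac.compare_digest(quoted_pcr, replay(expected_measurements))


if __name__ == "__main__":
    # Hypothetical boot chain; real measurements are hashes of boot components.
    expected = [b"bootloader", b"kernel", b"os-image"]
    print(quote_matches(replay(expected), expected))
    print(quote_matches(replay([b"bootloader", b"kernel", b"tampered"]), expected))
```

Because each extend hashes the previous PCR value together with the new measurement, any change to a component or to the order of components produces a different final value, which is what lets a remote verifier detect a modified system.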

This version supports any GPU because it is a purely software solution. The vTPM is provided as a virtual device by the cloud hypervisor and does not require any specific hardware support such as CPU memory encryption. Consequently, the vTPM approach comes with no performance penalty beyond the virtualization overhead, which applies to all virtual machines whether or not they have a vTPM. The main cloud providers offer their own variants of this support, including Azure’s Trusted Launch VMs, GCP’s Shielded VMs, and AWS’s NitroTPM.

Blindllama-v2 supports only this version of Mithril OS. We chose to work with Azure because they were willing to provide a root certificate that can be used to verify the authenticity of the TPM quote. Other CSPs sometimes only provide the public key to the owner of the VM (via an API, not publicly). In that case, a workaround is needed to establish trust in the key that the root of trust uses to sign the quote, so that the attestation can be verified by anybody.

In summary, Mithril OS vTPM is best suited for scenarios where the cloud provider is trusted, and proof of service immutability is required without the need for memory encryption. Its broad GPU support and compatibility with major cloud providers make it a flexible and robust option for many deployments.

Mithril OS SEV-SNP

Another option is to leverage the H100 GPU, which offers runtime memory encryption, making it a superior choice for running workloads on untrusted infrastructure. In this option, we leverage the hardware report from the processor for remote attestation.

The primary limitation of this option is that Nvidia H100 GPUs are currently the only GPUs with Confidential Computing capability.

Another significant limitation is that the H100 GPU requires a CPU protection solution such as Intel TDX or AMD SEV-SNP. On most cloud providers, the SNP or TDX implementation supports only some of the features and is therefore insufficient to perform remote attestation.

To cope with this limitation, we propose using both a TPM quote and the hardware report for attestation. With some CSPs, the only way to achieve this is to use their established remote attestation protocol, which means you need to be able to trust the CSP. On bare metal, all SEV-SNP security features can be used, and the AMD CPU can serve as the root of trust for remote attestation, ensuring a high level of security.
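The combination of a TPM quote and a hardware report can be pictured as a small verification policy. The sketch below is purely illustrative: the `Evidence`, `Verdict`, and `evaluate` names are hypothetical, the two boolean flags stand in for verification steps performed elsewhere, and requiring both pieces of evidence in every environment is a conservative assumption of this sketch rather than a documented Mithril OS rule.

```python
from dataclasses import dataclass
from enum import Enum


class RootOfTrust(Enum):
    CSP = "cloud provider attestation protocol"
    AMD_CPU = "AMD CPU (SEV-SNP)"


@dataclass(frozen=True)
class Evidence:
    tpm_quote_ok: bool  # result of checking the TPM quote (done elsewhere)
    hw_report_ok: bool  # result of checking the CPU hardware report (done elsewhere)
    bare_metal: bool    # True when all SEV-SNP features are available


@dataclass(frozen=True)
class Verdict:
    accepted: bool
    root_of_trust: RootOfTrust


def evaluate(evidence: Evidence) -> Verdict:
    """Accept only if both pieces of evidence check out (assumption of this sketch)."""
    accepted = evidence.tpm_quote_ok and evidence.hw_report_ok
    # On bare metal the CPU itself anchors trust; otherwise the CSP must be trusted.
    root = RootOfTrust.AMD_CPU if evidence.bare_metal else RootOfTrust.CSP
    return Verdict(accepted, root)


if __name__ == "__main__":
    print(evaluate(Evidence(tpm_quote_ok=True, hw_report_ok=True, bare_metal=False)))
```

Returning the root of trust alongside the decision makes explicit to the relying party whom they end up trusting in each environment.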

We have added support for SEV-SNP in Mithril OS but have yet to open-source it. Contact us to get early access.

Runtime encryption can introduce a slight performance overhead compared to running the same workload without the confidential computing mode. The overhead is usually less than a 5% reduction in compute throughput, which is negligible in most cases.

In essence, Mithril OS SEV-SNP is designed for highly secure deployments on potentially untrusted infrastructures. It provides robust memory encryption and leverages the attestation mechanisms in the CPU and GPU. It is ideal for applications requiring stringent data protection and confidentiality.
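The selection criteria discussed in this article can be summarized as a small decision helper. This is only a sketch of the reasoning: the function name, parameters, and returned labels are hypothetical, and a real decision would also weigh factors such as cost and provider availability.

```python
from typing import Optional

# CPU protection technologies named in this article as H100 prerequisites.
CPU_TEES = {"SEV-SNP", "TDX"}


def choose_stack(trusts_cloud_provider: bool, gpu: str,
                 cpu_tee: Optional[str] = None) -> str:
    """Return the option suggested by the article's criteria (illustrative only)."""
    if trusts_cloud_provider:
        # Software-only root of trust: works with any GPU, no memory encryption.
        return "Mithril OS vTPM"
    if gpu == "H100" and cpu_tee in CPU_TEES:
        # Untrusted infrastructure: needs runtime memory encryption on CPU and GPU.
        return "H100 with Confidential Computing"
    raise ValueError(
        "Untrusted infrastructure requires an H100 GPU with SEV-SNP or TDX"
    )


if __name__ == "__main__":
    print(choose_stack(True, "A100"))
    print(choose_stack(False, "H100", "SEV-SNP"))
```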

Conclusion