Presenting Mithril Cloud, the First Confidential AI as a Service Offering

Discover how BlindAI Cloud lets you deploy and query AI models with privacy in just two lines of Python code. Try our solution by deploying a ResNet model.

The cloud hosting for BlindAI was decommissioned in May 2023 because we made the switch from BlindAI to BlindBox. This transition was driven by the challenges we encountered with BlindAI, such as development constraints, maintenance difficulties, and limited file format support. BlindBox addressed these challenges by offering improved ease of use, flexibility, and speed, making it a more suitable solution to meet the needs of SaaS vendors.

After a summer of prototyping, testing, and running big models, we are pleased to announce the release of the first version of Mithril Cloud next week. This Cloud offering will be the first Confidential AI as a Service offer, making it possible to deploy AI models with privacy using only a Python interface! Our goal at Mithril Security is to make AI privacy easy. Let’s see how this service will help you bring AI privacy one step further.

I – Context

AI continues to transform whole ecosystems, from biometrics to healthcare to fraud detection. Nonetheless, because data is the key ingredient for leveraging AI, security and privacy have become obstacles to unleashing its full potential. Indeed, many organizations are slowed down in their AI adoption by security and privacy issues linked to sharing their data.

For instance, hospitals could benefit much more from AI solutions. Yet sourcing the hardware and software stack to run those complex AI systems is difficult, so on-premise deployment (the preferred deployment method for workloads involving sensitive data) is not always feasible.

AI as a Service in the Cloud is a natural way to onboard users easily, but data owners then have to trust both the AI company (for example, a startup) and the Cloud provider with their sensitive data. Data is exposed to both parties once it leaves the hospital infrastructure, meaning it could be used and abused in many ways without the hospital knowing.

Privacy Enhancing Technologies, such as Confidential Computing, have emerged to protect data at all times, even when it is sent to remote untrusted infrastructure. Thanks to the use of secure enclaves, data remains end-to-end protected.

That is why we have released BlindAI, an open-source AI deployment solution using secure enclaves. By using BlindAI, AI companies can deploy their models in the Cloud and have their users consume them while ensuring privacy and security for the data owner. We can see it illustrated below with an example of what happens with/without BlindAI for speech analysis in the Cloud.

However, secure enclave solutions are complex to leverage. Not only do you need to find the hardware, for instance, Intel CPUs with the right Intel SGX features, but you also need to deploy the appropriate software and have it properly configured to avoid security issues.

We have realized at Mithril Security that those two issues, sourcing the hardware and setting up the software, would make it hard for AI engineers to adopt BlindAI.

We thought about how to onboard users and clients easily and how to manage the infrastructure needed to leverage secure enclaves. That’s how we came up with BlindAI Cloud!

II – Introducing BlindAI Cloud

How to use BlindAI?

- Deploy our software on a machine with the right security features, for instance, an Azure Confidential VM running with Intel SGX.

- Upload the AI model inside of it, in ONNX format for compatibility.

- Securely query the AI model inside the enclave.

While the last two steps are done easily with our Python SDK (which can be set up with a simple pip install blindai), the first one requires access to the right hardware to deploy our Docker image. This is troublesome for many users who don’t have compatible hardware or deployment expertise.

This is why we have decided to create a fully managed version of BlindAI so that users don’t need to worry about finding the hardware and setting up our solution to start experimenting with Confidential AI.

Now, uploading a model and querying it securely becomes trivial and can be done in a few lines of code.

Yet now that Mithril manages enclaves for users, does that mean you need to trust us with confidential data sent to our managed instances?

No, we don't have access to data sent to BlindAI Cloud!

Indeed, thanks to enclave memory isolation and encryption, data handled inside an enclave are not accessible by outside operators, be it us, the model provider, or the Cloud provider. You can find out more in our article Confidential Computing Explained: Data in use protection.

How can you know that we are not putting backdoors in our infrastructure?

Again, another property of secure enclaves comes to our rescue: remote attestation! A secure enclave can provide unforgeable proof that a specific piece of code is loaded inside it. Thanks to specific CPU instructions and tamper-proof hardware secrets, which are used to sign a report attesting to the enclave's identity and the hash of the code inside, users can verify that only code they know and trust will be executed on their data.

This way you can make sure that the open-source version of BlindAI is loaded because you will be able to verify the hash of the code inside the enclave.

The workflow to make sure our solution is secure is to:

- Inspect the open-source code of the BlindAI server, and make sure that data is not exposed. If you don’t want to do this yourself, that is fine: we will have external independent auditors do it for you.

- Compile the open source code of BlindAI, and generate the hash of the enclave.

- When using our solution, make sure that the hash you computed before, from trusted code, is indeed the one loaded inside the enclave. If the hash reported by the enclave does not match the one generated from our open-source code, don’t send anything!
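The last check in this workflow can be pictured as a comparison of two measurements. The sketch below is a simplified illustration, not BlindAI's actual attestation code: it uses a SHA-256 digest as a stand-in for the enclave measurement (a real SGX measurement is produced by the CPU and delivered inside a signed report), and the names `measure`, `is_trusted`, and `send_if_trusted` are hypothetical.

```python
import hashlib
import hmac


def measure(enclave_binary: bytes) -> str:
    """Stand-in for an enclave measurement: SHA-256 of the build output.

    Assumption: a real SGX measurement is derived by the hardware, not by
    hashing a file; this only illustrates the comparison logic.
    """
    return hashlib.sha256(enclave_binary).hexdigest()


def is_trusted(expected: str, attested: str) -> bool:
    """Return True only when the attested measurement matches the expected one."""
    # compare_digest avoids leaking, through timing, where the strings differ
    return hmac.compare_digest(expected, attested)


def send_if_trusted(expected: str, attested: str, payload: bytes) -> bytes:
    """Hypothetical guard: refuse to release data to an unverified enclave."""
    if not is_trusted(expected, attested):
        raise RuntimeError("Enclave measurement mismatch: not sending data")
    return payload  # a real client would encrypt and transmit the payload here


# Measurement computed locally from code you audited and compiled yourself
audited_build = b"audited enclave build"
expected = measure(audited_build)

# Matching measurement: the data may be sent
assert send_if_trusted(expected, measure(audited_build), b"x-ray") == b"x-ray"

# A modified build yields a different measurement and is rejected
tampered = measure(b"audited enclave build with backdoor")
print(is_trusted(expected, tampered))  # False
```

The point of the guard is that the decision to send data depends only on a value you computed yourself from audited code, never on a claim made by the server operator.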

III – BlindAI Cloud in action

Enough talk, let’s see how BlindAI Cloud works in practice! We will see how we can deploy a ResNet18 model with the new Cloud solution. You can find the code to run this on this Google Colab notebook.

For those of you who have used BlindAI before, the default choice will now be to connect to Mithril Cloud, but you can still deploy on-premise by providing the address of the server you set up.

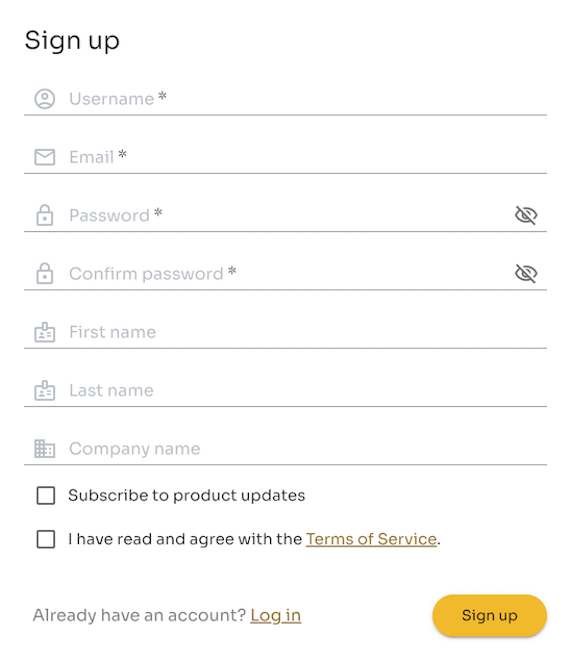

A - Register on Mithril Cloud

Querying models can be done without signing up, but uploading a model will require you to be registered on Mithril Cloud. First, go there and register.

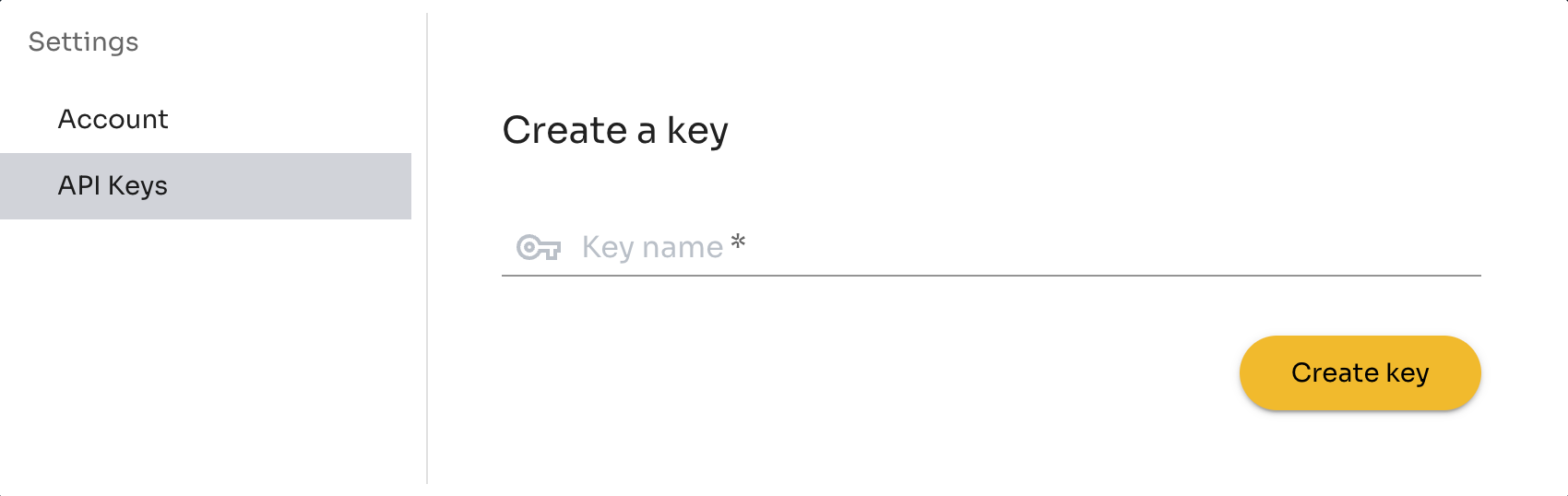

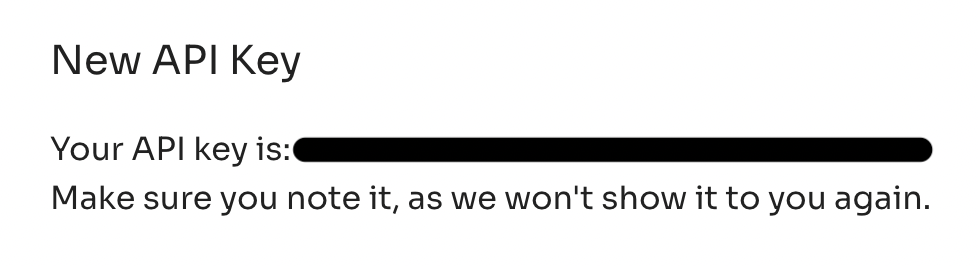

Once you are registered, go to the Settings page and create an API key to query our Cloud.

Please write down your API key somewhere and don’t lose it, otherwise, you will have to create another one!
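One way to keep the key out of notebooks and scripts is to read it from an environment variable. This is a minimal sketch of that general practice, not something the BlindAI SDK requires; the variable name `BLINDAI_API_KEY` and the helper `load_api_key` are hypothetical.

```python
import os
from typing import Mapping, Optional


def load_api_key(env: Optional[Mapping[str, str]] = None,
                 var_name: str = "BLINDAI_API_KEY") -> str:
    """Read the API key from the environment and fail loudly if it is missing.

    `var_name` is a hypothetical name chosen for this example.
    """
    env = os.environ if env is None else env
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set {var_name} before uploading a model")
    return key


# Example with an explicit mapping instead of the real environment
api_key = load_api_key({"BLINDAI_API_KEY": "YOUR_API_KEY"})
```

Calling `load_api_key()` with no argument reads from the real process environment instead.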

Once you have your API key, we can move to the next step: install our library before uploading your model.

B - Install BlindAI

You will need our Python SDK to upload a model and query it securely.

You can install it using PyPI with:

pip install blindai

Or you can build it from the source using our repository.

C - Upload your model

First, we will need to fetch a ResNet18 model from PyTorch Hub and export it to ONNX. ONNX is a standard format for deploying AI models. Models trained using PyTorch or TensorFlow can easily be exported to ONNX.

We will follow the steps from PyTorch Hub to get the model and query it.

import torch

model = torch.hub.load('pytorch/vision:v0.10.0', 'resnet18', pretrained=True)

# We need to provide an example of input with the right shape to export

dummy_inputs = torch.zeros(1,3,224,224)

torch.onnx.export(model, dummy_inputs, "resnet18.onnx")

You will need to provide the API key you generated earlier to upload your model.

You might get an error if the name you want to use is already taken, as models are uniquely identified by their model_id. We will implement a namespace soon to avoid that. Meanwhile, you will have to choose a unique ID. We provide an example below to upload your model with a unique name:

import blindai

import uuid

api_key = "YOUR_API_KEY" # Enter your API key here

model_id = "resnet18-" + str(uuid.uuid4())

# Upload the ONNX file to the remote enclave

with blindai.Connection(api_key=api_key) as client:

    response = client.upload_model("resnet18.onnx", model_id=model_id)

Once this is done, our model is uploaded inside a secure enclave!

All further requests made to it will be done with end-to-end protection, meaning the data sent is not exposed to any third party.

D - Query your model

First, we will grab an example image, here a Samoyed dog, and preprocess it.

from torchvision import transforms

import urllib.request

from PIL import Image

# Download an example image from the PyTorch website

url, filename = ("https://github.com/pytorch/hub/raw/master/images/dog.jpg", "dog.jpg")

urllib.request.urlretrieve(url, filename)

# sample execution (requires torchvision)

input_image = Image.open(filename)

preprocess = transforms.Compose([

    transforms.Resize(256),

    transforms.CenterCrop(224),

    transforms.ToTensor(),

    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),

])

input_tensor = preprocess(input_image)

input_batch = input_tensor.unsqueeze(0) # create a mini-batch as expected by the model

Now we just need to query our model with it:

import blindai

import torch

with blindai.Connection(api_key=api_key) as client:

    # Send data to the ResNet18 model

    prediction = client.predict(model_id, input_batch)

Finally, we can apply a softmax to the model output to see which class our ResNet18 predicted:

import requests

response = requests.get("https://git.io/JJkYN")

labels = response.text.split("\n")

# "prediction" is the result returned by client.predict above

output = prediction.output[0].as_torch()

probabilities = torch.nn.functional.softmax(output, dim=-1)

labels[probabilities.argmax().item()], probabilities.max().item()

>> ('Samoyed', 0.9001086950302124)

Et voilà! We have been able to upload a model inside a secure enclave and query it securely with end-to-end protection, all from a Python interface!
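For readers curious about the post-processing step, here is a minimal pure-Python sketch of what the softmax and argmax calls compute. The three scores and labels are made up for illustration and are not outputs of the ResNet18 model; `softmax` and `top_prediction` are hypothetical helper names.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores into probabilities that sum to 1."""
    # Subtract the maximum before exponentiating for numerical stability
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_prediction(logits: Sequence[float],
                   labels: Sequence[str]) -> Tuple[str, float]:
    """Return the most likely label together with its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


# Toy values chosen for this example only
toy_labels = ["tabby", "Samoyed", "goldfish"]
toy_logits = [1.0, 3.0, 0.5]

label, prob = top_prediction(toy_logits, toy_labels)
print(label, round(prob, 3))
```

The highest raw score always maps to the highest probability, so the softmax does not change which label wins; it only turns the scores into a confidence value that is easier to interpret.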

Conclusion

We have seen in this article why we thought it was key to lower the barriers to entry for Confidential AI, and how BlindAI Cloud helps achieve this.

We hope you liked this article! If you are interested in Privacy and AI, do not hesitate to drop a star on our GitHub and chat with us on our Discord!