Build a Privacy-By-Design Voice Assistant With BlindAI

Discover how BlindAI can make AI voice assistants privacy-friendly!

In this article, we will see how AI can address the challenges of smart voice assistants, and how BlindAI can overcome the privacy issues that come with deploying such AI.

In a Nutshell:

1. We discuss the challenges of deploying AI in the cloud due to privacy concerns and explain how BlindAI uses secure enclaves to guarantee data protection.

2. We present a tutorial on deploying a state-of-the-art speech-to-text model, Wav2vec2, using BlindAI.

Introduction

One of the most sought-after goals of AI is to develop a machine able to understand us, interact with us, and provide support in our everyday life just like in the movie Her.

But where are we currently? We all remember the Google Duplex conference where we were promised a lifelike AI assistant, but such a companion still seems far away today.

One of the key components of a real conversational AI is speech. Transcribing human speech into text is extremely hard. Nonetheless, recent approaches based on large-scale unsupervised learning with Transformer-based models, like Wav2vec2, have opened new horizons. Such models can be trained on huge amounts of unlabelled data, break down sounds into small tokens, and leverage them with attention mechanisms.

Unfortunately, those approaches, relying on mountains of data to be trained, have one hidden cost: privacy.

Indeed, we have seen in the past that GAFAM’s voice assistant solutions have had privacy issues, with much more data being recorded than announced. Many sensitive conversations were recorded and used without people’s knowledge, causing a massive uproar.

In view of these privacy breaches, should we refrain from developing speech recognition AIs with life-changing potential? Do we have to choose between privacy and convenience?

At Mithril Security, we believe that there is a third way: democratize privacy-friendly AI to help improve AI systems without compromising on privacy. That is why we have built BlindAI, an open-source and privacy-friendly solution to deploy AI models with end-to-end protection.

We will see in this article how a state-of-the-art Speech-To-Text (STT) model, Wav2Vec2, can be deployed so that users can leverage AI without worrying that their conversation could be heard by anyone else.

I - Use case

A - Deep Learning for Speech-To-Text

We will see a concrete example of when and how to use BlindAI.

Let’s imagine you have a startup that has developed a state-of-the-art speech-to-text solution, to facilitate the transcription of medical exchanges between a patient and her therapist.

Enormous amounts of information come out during a therapy session, so it can never be fully transcribed and much of it gets lost. It can be quite frustrating for patients to realize that parts of their sessions are forgotten or never put down on paper because their therapists did not have time to write everything down. Writing everything down is challenging for therapists, who must handle multiple one-hour sessions all day long.

Therefore, the startup proposes a Speech-To-Text AI solution, which would enable the therapist to focus less on note-taking, create much more reliable data that can be leveraged for research, and help patients get better care.

B - Deployment challenge

One natural way for the startup to deploy their AI for therapy transcription is through a Cloud Solution Provider. Indeed, providing all the hardware and software components to deploy this solution is quite complex and costly, and not necessarily the main focus of the startup.

In addition, deploying their AI as a Service makes it easy to integrate with existing solutions, and facilitates onboarding as little is asked of therapists.

Nonetheless, deploying such a solution in the Cloud creates privacy and security challenges. Respecting session confidentiality is key to maintaining patient-doctor privilege but it is hard to provide guarantees that therapy sessions are never accessed.

Malicious insiders, such as a rogue admin of the startup or the cloud provider, or even a hacker having internal access, could all compromise this extremely sensitive data.

Because of these non-negligible risks and the lack of privacy-by-design AI production tools, deploying this AI for therapy sessions in the Cloud can become highly complex.

C - Confidential AI deployment

However, by using BlindAI, voice recordings of sessions can be sent to be transcribed by an AI in the Cloud without ever being revealed in clear to anyone else. By leveraging secure enclaves, it becomes possible to guarantee end-to-end data protection, even when we send data to a third party hosted in the Cloud.

The diagram above shows how regular solutions could expose session recordings to the Cloud provider or the startup, and how BlindAI provides a secure environment in which data is always protected.

We cover the threat model and the protections provided by secure enclaves in our article Confidential Computing Explained, part 3: data in use protection.

The main idea is that secure enclaves provide a trusted execution environment, enabling people to send sensitive data to a remote environment, while not risking exposing their data.

This is done through isolation and memory encryption of enclave contents by the CPU. By sending data to this secure environment to be analyzed, patients and therapists have guarantees that their data is accessible neither to the Cloud Provider nor to the AI company, thanks to the hardware protection.

Therefore, both can benefit from a state-of-the-art service without going through complex deployment on-premise and adopt a solution that they do not need to maintain themselves. All this, while keeping a high level of data protection as their data is not exposed to the Service Provider or the Cloud Provider.

II - Deployment of confidential voice transcription with BlindAI using Wav2vec2

In this tutorial, we propose a quick start with the deployment of a state-of-the-art model, Wav2vec2 for speech-to-text, with confidentiality guarantees, using BlindAI.

To reproduce this article, we provide a Colab notebook for you to try.

In this article, we'll be using the managed Mithril Cloud as our BlindAI server, which means you don't have to launch anything locally.

A - Install BlindAI

You will need our Python SDK to upload a model and query it securely.

You can install it using PyPI with:

pip install blindai

or you can build it from source using our repository.

B - Deploy your server

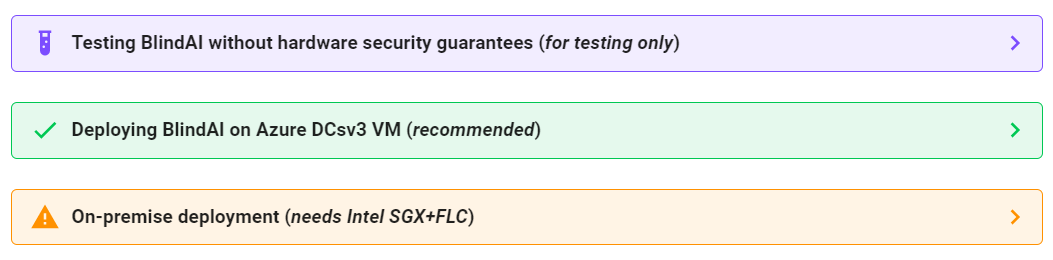

There are three possible deployment methods. Our documentation describes each of them.

C - Upload the model

Because BlindAI only accepts AI models exported in ONNX format, we first need to convert the Wav2vec2 model into ONNX. ONNX is a standard format to represent AI models before shipping them into production. PyTorch and TensorFlow models can easily be converted into ONNX.

Step 1: Prepare the Wav2vec2 model

We will load the Wav2vec2 model using the Hugging Face transformers library.

from transformers import Wav2Vec2Processor, Wav2Vec2ForCTC
import torch

# Load model and processor
processor = Wav2Vec2Processor.from_pretrained("facebook/wav2vec2-base-960h")
model = Wav2Vec2ForCTC.from_pretrained("facebook/wav2vec2-base-960h")

Load model and processor for Wav2vec2

In order to facilitate the deployment, we will add the post-processing directly to the full model. This way the client will not have to do the post-processing.

import torch.nn as nn

# Let's embed the post-processing phase with argmax inside our model
class ArgmaxLayer(nn.Module):
    def __init__(self):
        super(ArgmaxLayer, self).__init__()

    def forward(self, outputs):
        # Pick the most likely token id for each audio frame
        return torch.argmax(outputs.logits, dim=-1)

final_layer = ArgmaxLayer()

# Finally we concatenate everything
full_model = nn.Sequential(model, final_layer)

Add postprocessing to the model we will export

Let's download a hello world audio file to use as an example.

wget https://github.com/mithril-security/blindai/raw/master/examples/wav2vec2/hello_world.wav

Get "Hello world" audio sample

We will need the librosa library to load the wav hello world file before tokenizing it.

import librosa

# Load the audio, resampled to 16 kHz
audio, rate = librosa.load("hello_world.wav", sr=16000)

# Tokenize sampled audio to input into model
input_values = processor(audio, sampling_rate=rate, return_tensors="pt", padding="longest").input_values

Load and preprocess audio file
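To give an intuition of what the preprocessing step does to the waveform, here is a minimal sketch of zero-mean, unit-variance normalization. To our understanding this is what the Wav2vec2 processor applies by default for this checkpoint, but treat that as an assumption and check the transformers documentation. The function name `zero_mean_unit_variance`, the `eps` value, and the toy waveform are ours and purely illustrative.

```python
import math

def zero_mean_unit_variance(samples, eps=1e-7):
    """Rescale a 1-D list of audio samples to zero mean and unit variance."""
    n = len(samples)
    mean = sum(samples) / n
    # Population variance of the samples
    var = sum((s - mean) ** 2 for s in samples) / n
    # eps avoids a division by zero on silent audio (assumed value)
    scale = math.sqrt(var + eps)
    return [(s - mean) / scale for s in samples]

# Toy waveform standing in for the array returned by librosa.load
toy_audio = [0.0, 0.5, -0.5, 0.25, -0.25]
normalized = zero_mean_unit_variance(toy_audio)
print([round(x, 3) for x in normalized])
```

In the tutorial itself you do not need to write this by hand: the processor call takes care of the preprocessing.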

We can then see the Wav2vec2 model in action:

>>> predicted_ids = full_model(input_values)
>>> transcription = processor.batch_decode(predicted_ids)
>>> transcription
['HELLO WORLD']

Inference result
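The decoding step can look a bit magical, so here is a rough sketch of greedy CTC decoding, which is the general idea behind turning per-frame token ids into text: merge consecutive repeats, drop the blank token, and map the remaining ids to characters. The vocabulary, the blank id, and the `|` word delimiter below are toy assumptions for illustration, not the real Wav2vec2 vocabulary, and `ctc_greedy_decode` is a hypothetical helper rather than the library's implementation.

```python
def ctc_greedy_decode(frame_ids, id_to_token, blank_id=0, word_delimiter="|"):
    """Collapse repeated ids, drop blanks, and map ids to characters."""
    chars = []
    previous = None
    for token_id in frame_ids:
        # Keep an id only when it differs from the previous frame and is not blank
        if token_id != previous and token_id != blank_id:
            chars.append(id_to_token[token_id])
        previous = token_id
    # The delimiter token marks word boundaries
    return "".join(chars).replace(word_delimiter, " ").strip()

# Hypothetical toy vocabulary (NOT the real Wav2vec2 vocabulary)
toy_vocab = {0: "<pad>", 1: "|", 2: "H", 3: "E", 4: "L", 5: "O"}

# One id per audio frame, as an argmax layer would produce
toy_frame_ids = [2, 2, 0, 3, 4, 4, 0, 4, 5, 5, 1, 0]
print(ctc_greedy_decode(toy_frame_ids, toy_vocab))
```

Note how the blank between the two runs of `4` is what allows the double "L" to survive the merging of repeats.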

Step 2: Export the model

Now we can export the model to ONNX format so that we can later upload it to our BlindAI server.

torch.onnx.export(
    full_model,
    input_values,
    'wav2vec2_hello_world.onnx',
    export_params=True,
    opset_version=11)

Export to ONNX file

Step 3: Upload the model

To upload your model, you will need to provide the API key you generated earlier.

You might get an error if the name you want to use is already taken, as models are uniquely identified by their model_id. We will implement a namespace soon to avoid that. Meanwhile, you will have to choose a unique ID. We provide an example below to upload your model with a unique name:

import blindai
import uuid

api_key = "YOUR_API_KEY"  # Enter your API key here
model_id = "wav2vec2-" + str(uuid.uuid4())

# Upload the ONNX file to the remote enclave
with blindai.connect(api_key=api_key) as client:
    response = client.upload_model("wav2vec2_hello_world.onnx", model_id=model_id)

D - Get a prediction

Now it's time to check it's working live!

As previously, we will need to preprocess the hello world audio, before sending it for analysis by the Wav2vec2 model inside the enclave.

First, we prepare our input data, the hello world audio file.

from transformers import Wav2Vec2Processor
import librosa

# Load the processor (the client does not need the model itself)
processor = Wav2Vec2Processor.from_pretrained("facebook/wav2vec2-base-960h")

# Load the audio, resampled to 16 kHz
audio, rate = librosa.load("hello_world.wav", sr=16000)

# Tokenize sampled audio to input into model
input_values = processor(audio, sampling_rate=rate, return_tensors="pt", padding="longest").input_values

Loading and preprocessing of audio file
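Since the audio is loaded at 16 kHz throughout this tutorial, it can be handy to check the sampling rate of a recording before sending anything to the enclave. The sketch below reads the rate from a WAV header with Python's standard `wave` module. The helpers `wav_sample_rate` and `make_toy_wav` and the in-memory sine wave are our own illustrative assumptions. With a real file, you would pass its path instead.

```python
import io
import math
import struct
import wave

EXPECTED_RATE = 16000  # sampling rate used throughout this tutorial

def wav_sample_rate(source):
    """Return the sampling rate stored in a WAV header (path or file-like object)."""
    with wave.open(source, "rb") as wav_file:
        return wav_file.getframerate()

def make_toy_wav(rate, seconds=0.1, freq=440.0):
    """Build a small mono 16-bit sine-wave WAV in memory, for demonstration only."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav_file:
        wav_file.setnchannels(1)   # mono
        wav_file.setsampwidth(2)   # 16-bit samples
        wav_file.setframerate(rate)
        n_frames = int(rate * seconds)
        frames = b"".join(
            struct.pack("<h", int(32767 * 0.5 * math.sin(2 * math.pi * freq * i / rate)))
            for i in range(n_frames)
        )
        wav_file.writeframes(frames)
    buffer.seek(0)
    return buffer

# A toy recording at 44.1 kHz, which would need resampling
toy_wav = make_toy_wav(44100)
rate = wav_sample_rate(toy_wav)
needs_resampling = rate != EXPECTED_RATE
print(rate, needs_resampling)
```

In our example, `librosa.load(..., sr=16000)` already resamples the audio, so this check is mostly useful if you load files by other means.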

Now we can send it to the enclave.

import blindai

with blindai.connect() as client:
    response = client.predict(model_id, input_values)

Sending data to BlindAI for confidential prediction

We can now reconstruct the output:

>>> processor.batch_decode(response.output[0].as_torch().unsqueeze(0))
['HELLO WORLD']

Response decoding

Conclusion

Et voilà! We have been able to apply a state-of-the-art speech recognition model without ever having to show the data in clear to the people operating the service!

If you liked this example, do not hesitate to drop a star on our GitHub and chat with us on our Discord!