How to Prevent Cheating in AI Tests

Ways to cheat on AI tests include swapping models or overfitting on known test sets. One solution would be a secure infrastructure for verifiable tests that protects the confidentiality of both model weights and test data.

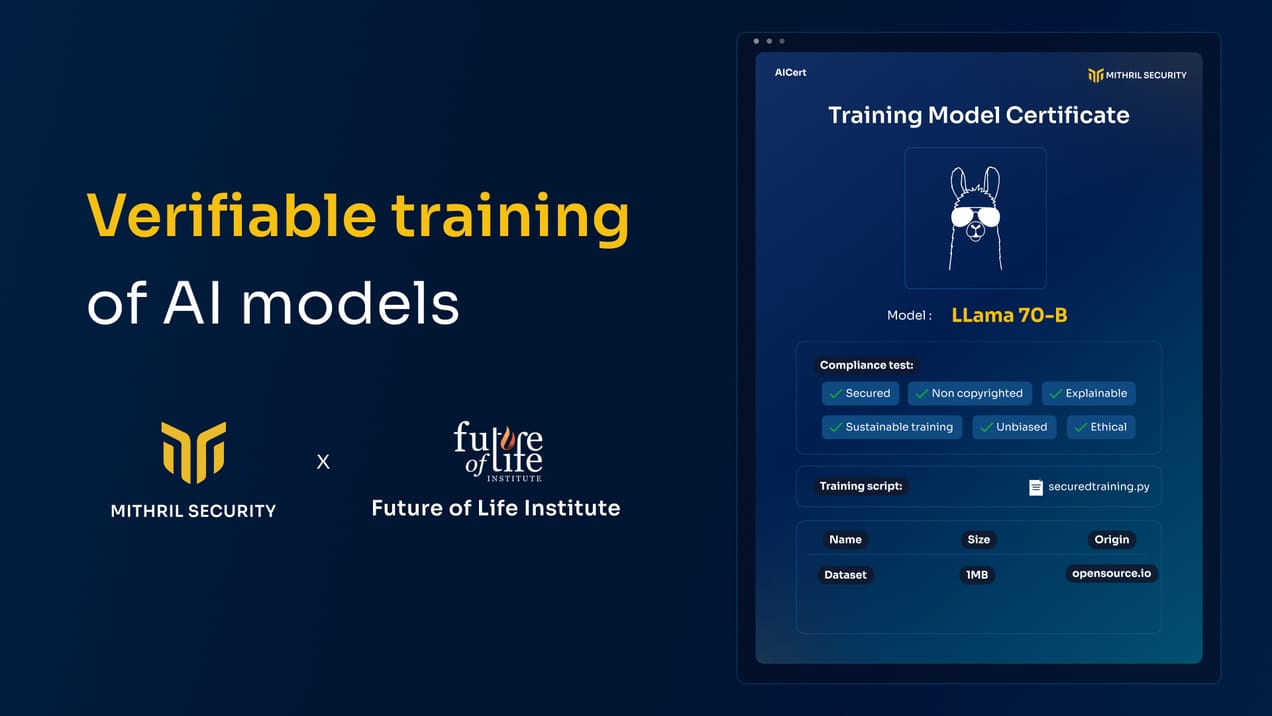

AICert v1.0 - Open-Source AI Traceability Tool for Verifiable Training

A year ago, Mithril Security exposed the risk of AI model manipulation with PoisonGPT. To solve this, we developed AICert, a cryptographic tool that creates tamper-proof model cards, ensuring transparency and detecting unauthorized changes in AI models.

Choosing your GPU stack to deploy Confidential AI

In this article, we offer a few hints on choosing a stack for building confidential AI workloads on GPUs. Confidential AI is meant to safeguard data privacy and the confidentiality of model weights.

Mithril Security Summer Update: Progress and Future Plans

Mithril Security's latest update outlines advancements in confidential AI deployment, emphasizing innovations in data privacy, model integrity, and governance for enhanced security and transparency in AI technologies.

Apple Announcing Private Cloud Compute

Apple has announced Private Cloud Compute (PCC), which uses Confidential Computing to ensure user data privacy in cloud AI processing, setting a new standard in data security.

BlindChat - Our Confidential AI Assistant

Introducing BlindChat, a confidential AI assistant prioritizing user privacy through secure enclaves. Learn how it addresses data security concerns in AI applications.

Mithril x Tramscribe: Confidential LLMs for Medical Voice Notes Analysis

How we partnered with Tramscribe to apply LLMs to the analysis of medical voice notes.

Mithril x Avian: Zero Trust Digital Forensics and eDiscovery

How we partnered with Avian to deploy sensitive forensic services using Zero Trust Elasticsearch.

Deploy Zero-trust Diagnostic Assistant for Hospitals

Improving Hospital Diagnoses: How BlindAI and BastionAI Could Assist

What To Expect From the EU AI Regulation?

A view on the key upcoming EU regulations, and how these are likely to affect data and AI industry practices.