How to Prevent Cheating in AI Tests

Ways to cheat on AI tests include swapping models and overfitting on known test sets. One solution would be a secure infrastructure for verifiable tests that protects the confidentiality of both model weights and test data.

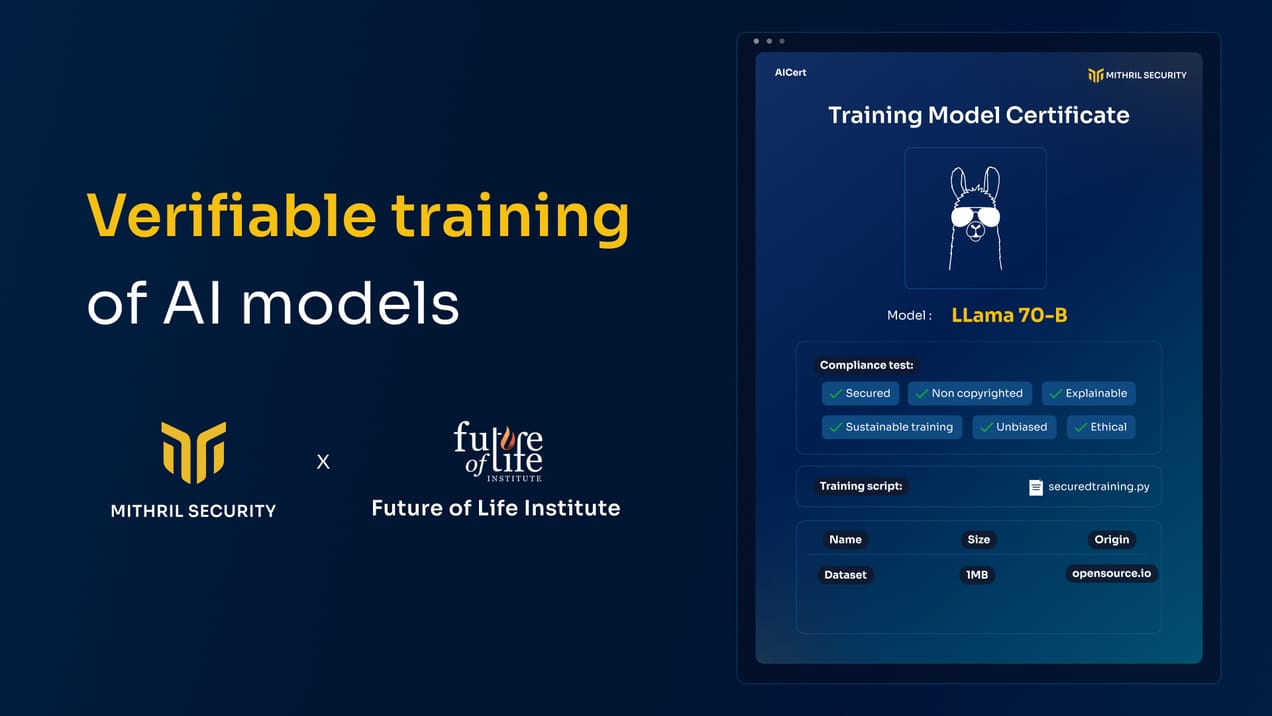

AICert v1.0 - Open-Source AI Traceability Tool for Verifiable Training

A year ago, Mithril Security exposed the risk of AI model manipulation with PoisonGPT. To solve this, we developed AICert, a cryptographic tool that creates tamper-proof model cards, ensuring transparency and detecting unauthorized changes in AI models.

Another Intel SGX Security Flaw? Our Analysis of the SGX Fuse Key Extraction Claim

A recent discovery reveals a weakness in older Intel CPUs affecting SGX security. Despite the alarm, the extracted keys are encrypted and unusable. Dive in to learn more.

Choosing your GPU stack to deploy Confidential AI

In this article, we offer a few hints on choosing a stack for building a confidential AI workload on GPUs, with the goal of safeguarding data privacy and the confidentiality of model weights.

Mithril Security Summer Update: Progress and Future Plans

Mithril Security's latest update outlines advancements in confidential AI deployment, emphasizing innovations in data privacy, model integrity, and governance for enhanced security and transparency in AI technologies.

Apple Announcing Private Cloud Compute

Apple has announced Private Cloud Compute (PCC), which uses Confidential Computing to ensure user data privacy in cloud AI processing, setting a new standard in data security.

Mithril Security is supported by OpenAI Cybersecurity Grant to build Confidential AI.

Mithril Security has been awarded a grant from the OpenAI Cybersecurity Grant Program. This grant will fund our work on developing open-source tooling to deploy AI models on GPUs with Trusted Platform Modules (TPMs) while ensuring data confidentiality and providing full code integrity.

Technical collaboration with the Future of Life Institute: developing hardware-backed AI governance tools

The article unveils AIGovTool, a collaboration between the Future of Life Institute and Mithril that employs Intel SGX enclaves for secure AI deployment. It addresses concerns of misuse by enforcing governance policies, protecting model weights, and controlling consumption.

BlindChat - Our Confidential AI Assistant

Introducing BlindChat, a confidential AI assistant prioritizing user privacy through secure enclaves. Learn how it addresses data security concerns in AI applications.

Privacy Risks of LLM Fine-Tuning

This article explores privacy risks in using large language models (LLMs) for AI applications. It focuses on the dangers of data exposure to third-party providers during fine-tuning and the potential disclosure of private information through LLM responses.

Our Journey To Democratize Confidential AI

This article provides insights into Mithril Security's journey to make AI more trustworthy and their perspective on addressing privacy concerns in the world of AI, along with their vision for the future.

Our Roadmap for Privacy-First Conversational AI

In September 2023, we released the first version of BlindChat, our confidential Conversational AI. We were delighted with the collective response to the launch: BlindChat has been gaining traction on Hugging Face over the past few weeks, and we've had more visitors than ever before. But this local,