Mithril Security Summer Update: Progress and Future Plans

Mithril Security's latest update outlines advancements in confidential AI deployment, emphasizing innovations in data privacy, model integrity, and governance for enhanced security and transparency in AI technologies.

At Mithril Security, we have been dedicated since 2021 to creating open-source frameworks for deploying private and secure AI using Confidential Computing. This summer review provides a comprehensive overview of our achievements and a preview of our upcoming projects.

TL;DR:

- Confidential AI Inference: Data breaches and privacy concerns necessitate secure AI processing. We developed BlindAI to ensure data privacy when interacting with AI models, making it easier for businesses to adopt AI securely. With the redesign for GPU compatibility, BlindLlama now enables the use of advanced AI models without compromising data confidentiality.

- AI Traceability: Verifying the integrity and source of AI models is essential to prevent misuse and ensure security. AICert offers a robust solution to ensure the integrity and transparency of AI models. By providing cryptographic proofs of model provenance, AICert helps companies verify that their AI models are built on trustworthy data and enhances overall security.

- AI Model Governance: Effective control over AI model usage is crucial for protecting intellectual property and preventing misuse. AIGovTool allows AI providers to enforce usage rules and protect their models' confidentiality. This tool offers peace of mind by ensuring that AI models are used as intended, with robust safeguards against misuse and reverse engineering, thereby protecting valuable intellectual property.

Since our inception in 2021, we've been dedicated to enhancing AI safety through secure enclaves. These hardware-based environments ensure data confidentiality and code integrity. Our passion for AI and privacy and our commitment to open-source principles drive our vision of safer AI deployment. As we approach the summer holidays, we want to provide a comprehensive overview of our projects over the past three years and outline what to expect from us in the next six months.

Confidential AI inference

We founded Mithril Security in Paris with a conviction: AI adoption was hindered by a dilemma between self-hosting AI models (safe but costly and complex) and using cloud-based AI models at the risk of data privacy. We aimed to offer a way out of this dilemma by developing a Confidential AI inference framework that protects users' data during analysis by AI models. We were convinced enclaves were the only technology flexible and battle-tested enough to create a Confidential AI solution combining good performance and flexibility (you can learn more about confidential computing in our detailed guide). This led us to develop the first open-source framework for AI inference leveraging Intel SGX secure hardware.

BlindAI - Confidential AI inference with Intel SGX

BlindAI protects both data and models: it guarantees the privacy of data sent to an AI provider, and it protects the model weights when the model is deployed on-premise. This deployment solution leverages Intel SGX secure hardware to deploy models on secure enclaves. You can learn more about how we built BlindAI in our initial blog post (already two years ago... time flies!).

In 2023, Quarkslab conducted an audit of BlindAI and found no vulnerabilities. This in-depth review by experts confirms the security of BlindAI.

We are quite proud of this project, but because it relies on Intel SGX, it only runs on CPUs. The lack of GPU compatibility makes it quite unrealistic to use with LLMs such as the GPT models, PaLM 2, or Claude.

BlindLlama - Confidential AI inference with GPUs

This is why we redesigned our architecture from scratch to better support LLMs with GPU compatibility. We started this move one year ago. At that time, Nvidia did not yet offer a GPU with a Confidential Computing option, so we had to find a workaround. Our whitepaper details how we did it and built our first GPU-compatible Confidential AI inference framework, using a vTPM as the root of trust for GPU-based deployments.

BlindLlama is an open-source tool for deploying AI models on GPUs with secure enclaves based on Trusted Platform Modules (TPMs) while ensuring data confidentiality and providing full code integrity. This project aims to prove that data can be sent to AI providers without any data exposure, not even to the AI provider’s admins.
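To give a feel for how a TPM can back a code-integrity claim, here is a minimal Python sketch of the standard PCR "extend" operation, in which each measured component is folded into a running hash. This is not BlindLlama's code: the component names are hypothetical placeholders, and a real attestation flow also relies on a TPM-signed quote with a fresh nonce, which this sketch leaves out.

```python
import hashlib
import hmac
from typing import Iterable

PCR_SIZE = 32  # size in bytes of a register in the SHA-256 PCR bank


def extend(pcr: bytes, component: bytes) -> bytes:
    """Fold one measurement into the register.

    A PCR is never overwritten; the new value is
    SHA-256(old_value || SHA-256(component)).
    """
    digest = hashlib.sha256(component).digest()
    return hashlib.sha256(pcr + digest).digest()


def measure_stack(components: Iterable[bytes]) -> bytes:
    """Replay a launch sequence, starting from an all-zero register."""
    pcr = bytes(PCR_SIZE)
    for component in components:
        pcr = extend(pcr, component)
    return pcr


# Hypothetical stack layers, standing in for real firmware/OS/server images.
expected_stack = [b"firmware-v1", b"os-image-v3", b"inference-server-v2"]
tampered_stack = [b"firmware-v1", b"os-image-v3", b"inference-server-v2-backdoor"]

# The verifier computes this value ahead of time from the published, audited stack.
expected_pcr = measure_stack(expected_stack)


def stack_is_trusted(reported_pcr: bytes) -> bool:
    """Compare the reported register value with the expected one."""
    return hmac.compare_digest(reported_pcr, expected_pcr)


print(stack_is_trusted(measure_stack(expected_stack)))  # True
print(stack_is_trusted(measure_stack(tampered_stack)))  # False
```

Because every value depends on all earlier measurements, changing or reordering any layer produces a different final register value, which is what lets a client detect a modified stack.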

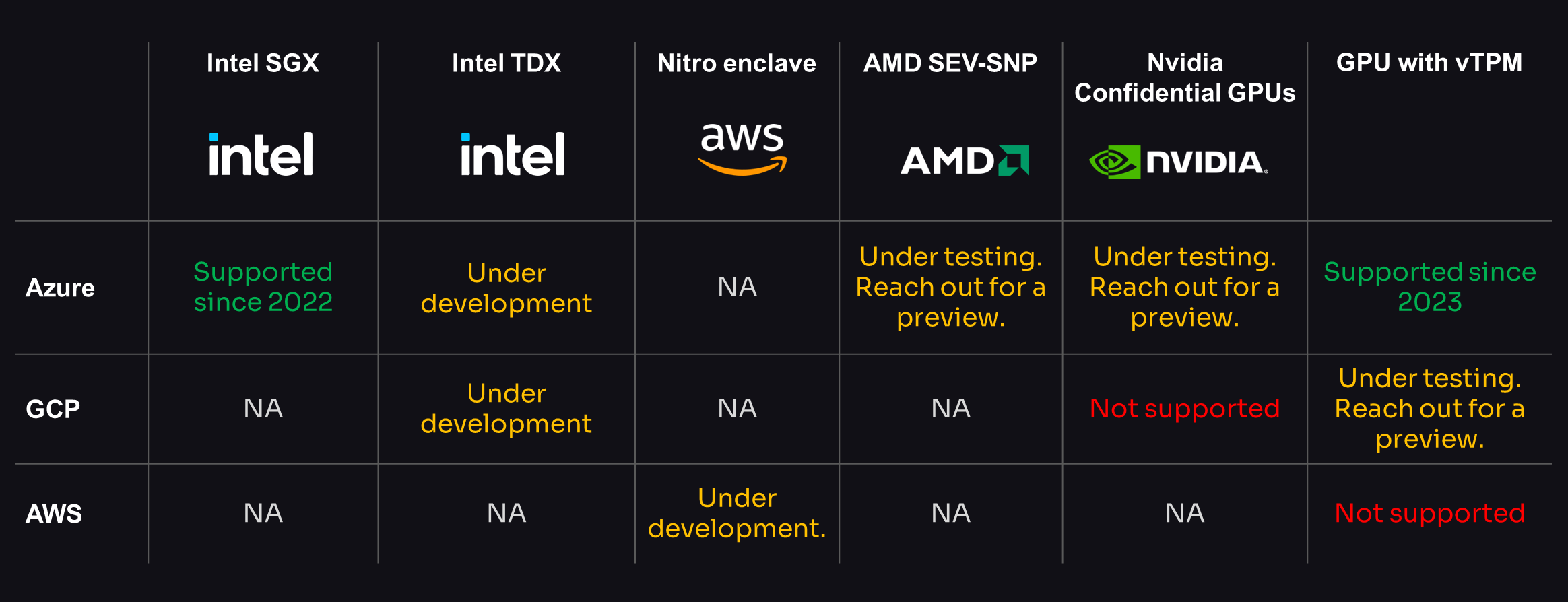

The current version of BlindLlama is only compatible with Azure. Our main priority today is expanding its compatibility. This work is nearly complete; we are in the final testing phase.

To complement BlindLlama, we developed BlindChat, a confidential AI assistant. BlindChat serves as the front end for this confidential AI inference framework. Soon, we'll release a V2 with inspectable attestation (Sign up to be sure you get pinged). You can learn more about BlindChat in our article or explore the project here.

We were very proud to have OpenAI support the development of part of this project.

Limitations & future development:

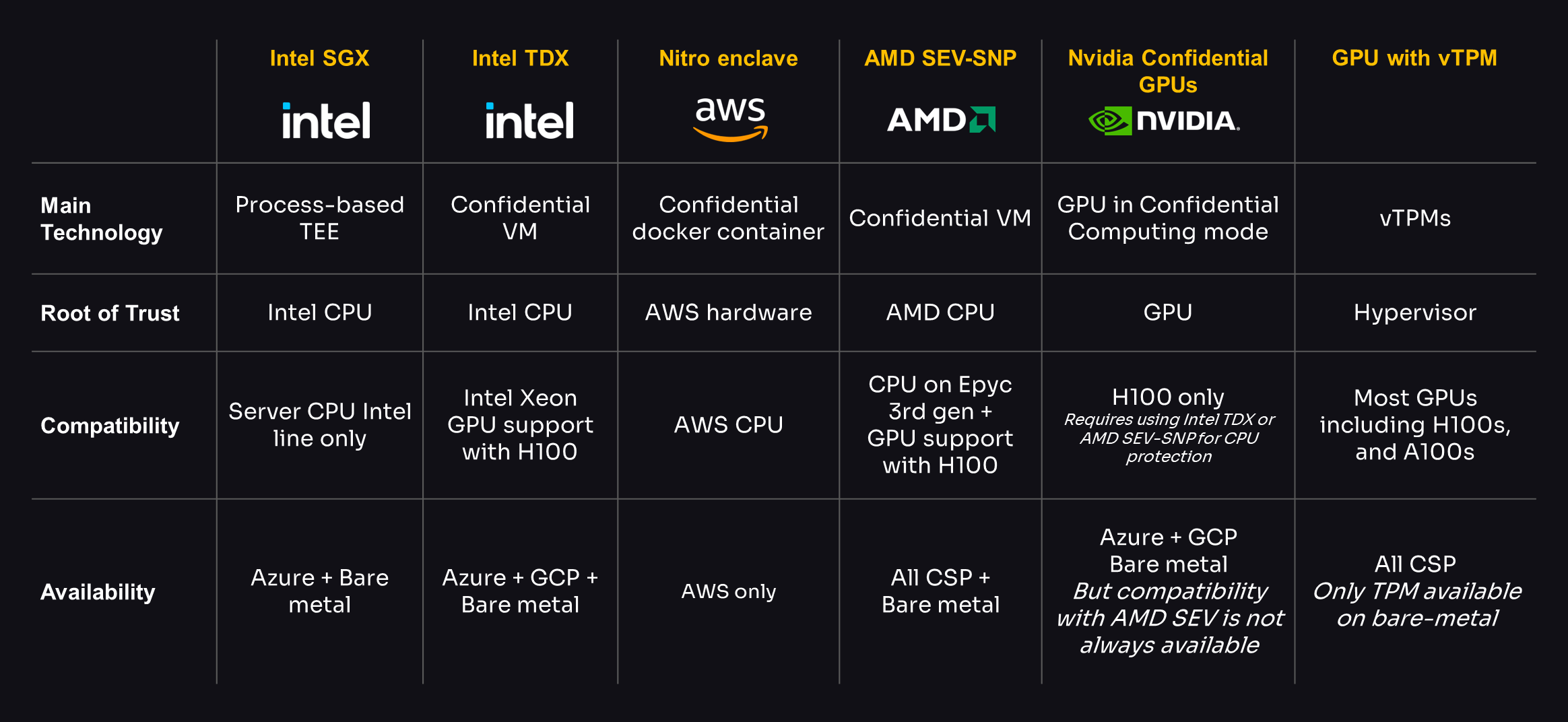

Here is a compatibility matrix for deploying our Confidential AI stack in a cloud environment, followed by a comparison of the main hardware options for building enclaves.

This overview focuses on cloud deployment. Tailored solutions can be provided for on-premise deployments or other cloud providers. Please contact us if you want to explore how our stack can be implemented on your servers.

AI traceability

One year ago, we highlighted an underestimated AI risk: AI model poisoning. With PoisonGPT, we showed how easily a malicious engineer can poison a model and spread misinformation. This issue persists, as demonstrated by Anthropic's "Sleeper Agents" research, in which a model trained to behave compliantly at first can shift to undesirable behavior later. The lack of AI model traceability (the ability to prove which training set and algorithm produced a model) remains a significant challenge.

In response, we developed a solution to prevent such supply chain attacks:

AICert

AICert aims to make AI models traceable and transparent, allowing AI model builders to create certificates with cryptographic proofs linking model weights to specific training data and code. AICert uses Trusted Platform Modules (TPMs) to attest the entire stack used in model production, offering non-forgeable proofs of model provenance. When the model is in production, end users can verify these AI certificates to ensure that the model originates from the stated training set and code, mitigating copyright, security, and safety issues.
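The idea of a certificate that binds weights to training data and code can be illustrated with a short Python sketch. It is an illustration under stated assumptions, not AICert's actual format or API: the field names are invented, and an HMAC with a demo key stands in for the hardware-backed signature a TPM would provide.

```python
import hashlib
import hmac
import json

# Stand-in for a hardware-protected signing key (assumption for this sketch).
# A real system would use an asymmetric key that never leaves the TPM.
DEMO_KEY = b"demo-key-not-a-real-tpm"


def sha256_hex(blob: bytes) -> str:
    return hashlib.sha256(blob).hexdigest()


def issue_certificate(dataset: bytes, code: bytes, weights: bytes) -> dict:
    """Bind the hashes of the inputs (data, code) to the hash of the output (weights)."""
    claims = {
        "dataset_sha256": sha256_hex(dataset),
        "code_sha256": sha256_hex(code),
        "weights_sha256": sha256_hex(weights),
    }
    # Serialize deterministically so issuer and verifier authenticate the same bytes.
    payload = json.dumps(claims, sort_keys=True).encode()
    tag = hmac.new(DEMO_KEY, payload, hashlib.sha256).hexdigest()
    return {"claims": claims, "tag": tag}


def verify_certificate(cert: dict, weights: bytes) -> bool:
    """Check that the claims are untampered and match the weights in hand."""
    payload = json.dumps(cert["claims"], sort_keys=True).encode()
    expected_tag = hmac.new(DEMO_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected_tag, cert["tag"]):
        return False
    return cert["claims"]["weights_sha256"] == sha256_hex(weights)


cert = issue_certificate(b"training-set", b"finetune.py contents", b"model-weights")
print(verify_certificate(cert, b"model-weights"))     # True
print(verify_certificate(cert, b"poisoned-weights"))  # False
```

The check fails both when the weights are swapped and when someone edits the claimed dataset or code hash, since either change breaks the match with the authenticated payload.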

AICert helps AI builders prove their models were not trained on copyrighted, biased, or non-consensual PII data, provides an AI Bill of Materials to prevent model poisoning, and offers a strong audit trail for compliance and transparency.

You can discover this open-source project here.

AICert was designed in partnership with the Future of Life Institute, a leading NGO advocating for AI system safety.

Limitations & future development:

- AICert currently supports only AI fine-tuning, not general AI training.

- AICert does not perform any audit; it only logs the stack. While AICert ensures traceability, it does not audit the training code or data for backdoors or threats. Full trustworthiness requires scrutiny of both AICert and the AI builder's code and data.

- Input data is not protected. We aim to release a feature for confidential AI fine-tuning in the next 12 months, adding input confidentiality to AICert. This development will address the problem of input privacy in fine-tuning that we discussed last year.

AI model governance

The rise of large language models (LLMs) has highlighted their potential for misuse, such as spreading misinformation or conducting cyberattacks. Additionally, powerful models risk being reverse-engineered by competitors or malicious entities.

Currently, if an AI provider supplies a model to an enterprise client who deploys it on their own infrastructure, the provider has no way of knowing whether the model is being misused or whether the weights are being extracted. Our enclave stack allows us to protect not only the input data but also the model weights, which led us to develop a form of digital rights management (DRM) for AI models.

AIGovTool is an open-source project that enforces AI model governance rules, such as consumption control, rate limiting, and off-switch triggers. This framework enables AI providers to lease models deployed on the client’s infrastructure, ensuring hardware guarantees and trusted telemetry for secure model use.

In the second half of 2023, we developed the first version of AIGovTool. It is a framework that allows the AI custodian to lease a model deployed on the enterprise client's infrastructure while having hardware guarantees that the weights are protected. Trusted telemetry for consumption and the off-switch are enforced by an enclave based on Intel SGX. You can learn more about this project in this article.
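The governance rules mentioned above (consumption control, rate limiting, and an off-switch) can be pictured as a small policy check that runs before each inference request. The Python sketch below is only a model of the rule logic, with hypothetical class and field names; it has no hardware protection, whereas in AIGovTool the enforcement runs inside an enclave so the client cannot alter the counters.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class UsagePolicy:
    """Hypothetical lease terms set by the model provider."""

    max_total_requests: int       # consumption cap for the whole lease
    max_requests_per_window: int  # rate limit within the sliding window
    window_seconds: float
    switched_off: bool = False    # off-switch controlled by the provider
    total_served: int = 0
    _recent: deque = field(default_factory=deque)

    def authorize(self, now: float) -> bool:
        """Return True and record the request only if every rule allows it."""
        if self.switched_off:
            return False
        if self.total_served >= self.max_total_requests:
            return False
        # Forget timestamps that have left the sliding window.
        while self._recent and now - self._recent[0] >= self.window_seconds:
            self._recent.popleft()
        if len(self._recent) >= self.max_requests_per_window:
            return False
        self._recent.append(now)
        self.total_served += 1
        return True


policy = UsagePolicy(max_total_requests=3, max_requests_per_window=2, window_seconds=1.0)
print(policy.authorize(now=0.0))  # True
print(policy.authorize(now=0.1))  # True
print(policy.authorize(now=0.2))  # False: rate limit reached
print(policy.authorize(now=1.5))  # True: window has moved on
print(policy.authorize(now=3.0))  # False: consumption cap reached
```

Passing the timestamp in explicitly keeps the example deterministic; a deployed version would read a trusted clock instead.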

Limitations & future development:

Currently, AIGovTool is at the proof-of-concept stage and only runs on SGX, so it is compatible only with CPUs. Significant product improvements are planned for 2025, but you can reach out now if you want early access. These improvements include:

- making it compatible with all GPUs covered by our Confidential AI inference stack by the end of the year

- multi-party model ownership

- slow-down switch features

Subscribe to our blog updates if you want to be kept informed of the latest news about the future developments described here and some new contracts we will disclose this summer.